Natural Language Processing (NLP) has come a long way in recent years, thanks to advancements in machine learning and artificial intelligence. One of the most exciting developments in this field is the emergence of Large Language Models (LLMs). These models are capable of processing vast amounts of text data and generating human-like responses to queries. They have already shown great promise in various applications such as chatbots, language translation, and content creation. In this blog section, we will explore what LLMs are, how they work, and their potential impact on NLP.

We will also discuss some of the challenges that come with using these models and how researchers are working to overcome them. Join us as we delve into the fascinating world of Large Language Models and discover their role in shaping the future of natural language processing.

What are Large Language Models?

Large language models, or LLMs for short, are a type of machine learning model that is trained on massive amounts of text data. As a result, they can be used to generate human-like text, complete various language tasks, and even better understand human language.

Most LLMs today are built on the transformer architecture, which has been used heavily in recent years because it performs exceptionally well at generating text that can be hard to tell apart from human writing. However, training large language models is not an easy task, as it requires massive amounts of data and computing resources.

Nevertheless, the benefits of leveraging these models for natural language processing (NLP) applications are enormous, as they are capable of transforming the way we interact with language and information. In the next sections, we will explore the potential of LLMs in NLP, as well as the challenges and benefits of using them.

The Potential of Large Language Models in Natural Language Processing (NLP)

Large language models (LLMs) have revolutionized the field of natural language processing (NLP) by unlocking the potential for machines to not only understand but also generate human-like language. One notable example of this is OpenAI’s GPT-3 (Generative Pre-trained Transformer 3) model, which can generate convincing text that is difficult to distinguish from human-written content. LLMs are trained on massive amounts of data from diverse sources, allowing them to learn the nuances of language and how it is used in various contexts. Moreover, these models can be fine-tuned to adapt to specific NLP tasks, such as language translation, summarization, or sentiment analysis. However, training such models comes with challenges, from the enormous amount of computing resources needed to the ethical issues surrounding the content used for training.

Challenges with Training and Deploying Large Language Models

Training and deploying Large Language Models (LLMs) has been a major concern in the field of Natural Language Processing (NLP) in recent years. The main challenges include:

- Accessing necessary resources for training LLMs due to huge amounts of data required

- High cost associated with training LLMs

- Significant computational power required for deployed LLMs, which can be a barrier to widespread deployment

- Ensuring that the training data accurately represents the tasks that the model will be deployed to perform

- Expensive maintenance of LLMs due to their complexity and size, including regular updates and fine-tuning

Addressing these challenges is crucial for successful deployment and realizing the potential of LLMs in NLP.
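To give a rough sense of the hardware barrier, the sketch below estimates the memory needed just to store a model's weights. It assumes the 175 billion parameter figure quoted for GPT-3 in this article and common numeric widths (4 bytes for 32-bit floats, 2 bytes for 16-bit floats). The function name is illustrative, and the estimate ignores everything else a running model needs, such as activations and caches.

```python
def weight_memory_gb(num_parameters: int, bytes_per_parameter: int) -> float:
    """Return the memory needed to store the weights alone, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


GPT3_PARAMETERS = 175_000_000_000  # figure quoted for GPT-3 in this article

fp32_gb = weight_memory_gb(GPT3_PARAMETERS, 4)  # assumption: 32-bit floats
fp16_gb = weight_memory_gb(GPT3_PARAMETERS, 2)  # assumption: 16-bit floats

print(f"32-bit weights: {fp32_gb:.0f} GB")
print(f"16-bit weights: {fp16_gb:.0f} GB")
```

Under these assumptions the weights alone come to about 700 GB in 32-bit precision and 350 GB in 16-bit precision, far more than the memory of a single consumer graphics card.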

Benefits of Leveraging Large Language Models for NLP Applications

One of the main benefits of using LLMs is their ability to generate text with high accuracy and fluency. For example, the famous GPT-3 model can generate articles, poems, and even computer code that are very similar to those written by humans.

Another benefit of LLMs is that they can be trained on large amounts of data and then used to perform various NLP tasks. This means that instead of training a separate model for each task, you can have one model that can perform multiple tasks. This is known as transfer learning and has been shown to improve the performance of NLP models.

Fortunately, recent advances in machine learning and the development of new LLM architectures such as Transformers have made it easier to train and use LLMs for NLP applications. Overall, leveraging LLMs for NLP applications can lead to better performance, more efficient use of resources, and faster deployment of new NLP solutions.

What are Large Language Models used for?

As we have already mentioned, LLMs can process and generate human-like language. These models have revolutionized the field of natural language processing by enabling machines to understand and respond to human language in a more sophisticated way than ever before. LLMs are a prominent example of generative AI, which uses state-of-the-art deep learning techniques to generate high-quality content such as text.

Generative AI works by training on massive amounts of text data, such as books, articles, and websites. The model then learns to identify patterns and relationships between words, phrases, and sentences. This allows it to generate new text that is grammatically correct, coherent, and sometimes even creative.
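The idea of learning which words tend to follow each other, and then using those patterns to produce new text, can be illustrated with a toy example. The sketch below is a simple bigram model, not a neural network, and it is far simpler than any real LLM. The corpus, function names, and parameters are all made up for illustration.

```python
import random
from collections import defaultdict


def train_bigram_model(text: str) -> dict[str, list[str]]:
    """Record, for every word, the words that followed it in the training text."""
    words = text.lower().split()
    followers = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word].append(next_word)
    return dict(followers)


def generate(model: dict[str, list[str]], start_word: str,
             max_words: int = 8, seed: int = 0) -> str:
    """Generate text by repeatedly sampling a word that followed the previous one."""
    rng = random.Random(seed)
    output = [start_word]
    while len(output) < max_words and output[-1] in model:
        output.append(rng.choice(model[output[-1]]))
    return " ".join(output)


# Tiny hypothetical training corpus; real models train on billions of words.
corpus = "the cat sat on the mat . the dog sat on the rug ."
model = train_bigram_model(corpus)
print(generate(model, "the"))
```

Every word pair in the generated text was seen during training, yet the sentence as a whole may be new. An LLM works on the same principle of predicting what comes next, but it looks at much longer contexts and learns its patterns with a deep neural network.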

Examples

Having trained an LLM, a foundation is established for utilizing AI in practical applications. With the aid of prompts to query the LLM, responses can be generated through model inference that may take various forms such as answers to questions, newly created text or images and summarized content. This process provides immense potential for enhancing productivity and efficiency across numerous industries.

- Text generation. The ability to generate text on any topic that the LLM has been trained on is a primary use case.

- Translation. For LLMs trained on multiple languages, the ability to translate from one language to another is a common feature.

- Content summary. Summarizing blocks or multiple pages of text is a useful function of LLMs.

- Rewriting content. Rewriting a section of text is another capability.

- Classification and categorization. An LLM is able to classify and categorize content.

- Sentiment analysis. Most LLMs can be used for sentiment analysis to help users to better understand the intent of a piece of content or a particular response.

- Conversational AI and chatbots. LLMs can enable a conversation with a user in a way that is typically more natural than older generations of AI technologies.
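Many of the use cases above differ only in the prompt that is sent to the model. The sketch below shows one possible way to organize such prompts. The template wording, the task names, and the `build_prompt` helper are assumptions made for illustration, and the call to an actual LLM is deliberately left out because it depends on the provider.

```python
# Hypothetical prompt templates, one per use case from the list above.
PROMPT_TEMPLATES = {
    "generation": "Write a short paragraph about: {text}",
    "translation": "Translate the following text into German:\n{text}",
    "summary": "Summarize the following text in two sentences:\n{text}",
    "rewriting": "Rewrite the following text in a friendlier tone:\n{text}",
    "classification": "Assign the following text to one category "
                      "(sports, politics, technology):\n{text}",
    "sentiment": "Is the sentiment of the following text positive, "
                 "negative, or neutral?\n{text}",
}


def build_prompt(task: str, text: str) -> str:
    """Fill the template for the given task with the user's text."""
    if task not in PROMPT_TEMPLATES:
        raise ValueError(f"Unknown task: {task!r}")
    return PROMPT_TEMPLATES[task].format(text=text)


print(build_prompt("sentiment", "The delivery was late, but support was great."))
```

The same model receives every one of these prompts; only the instruction changes. That is what makes a single LLM usable for so many different tasks.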

Different types of large language models (LLMs)

Large language models have become increasingly popular in recent years due to their ability to perform a wide range of natural language processing tasks, such as text classification, sentiment analysis, and machine translation. There are several types of LLMs available today, each with its own unique characteristics and applications. In this article, we will explore the different types of large language models and their respective strengths and weaknesses.

1. GPT (Generative Pre-trained Transformer) models: Developed by OpenAI, these language models use a transformer-based architecture and are pre-trained on massive amounts of text data. They have been praised for their ability to write text, answer questions, and even create original poetry. Examples include GPT-2, GPT-3, and, most recently, GPT-4. The differences between GPT-3 and GPT-4 are covered in a later section of this article.

2. BERT (Bidirectional Encoder Representations from Transformers) models: These are another type of transformer-based language model that is trained using both left-to-right and right-to-left contexts, allowing it to understand the context in which words appear in a sentence. Examples include BERT-base and BERT-large.

3. XLNet: This is a transformer-based model that combines an autoregressive approach with permutation-based training, so that it can learn from the context on both sides of a word.

4. T5 (Text-to-Text Transfer Transformer): This is a language model developed by Google that can perform various natural language processing tasks such as summarization, translation, question answering, and more.

5. RoBERTa (Robustly Optimized BERT Approach): This is a variant of the BERT model that has been optimized for better performance on various natural language understanding tasks.

6. ALBERT (A Lite BERT): This is a smaller version of the BERT model that uses parameter sharing techniques to reduce memory usage and improve training efficiency while maintaining high accuracy.

7. ELECTRA (Efficiently Learning an Encoder that Classifies Token Replacements Accurately): This is a novel pre-training method for language models that replaces some tokens in input text with plausible alternatives and trains the model to predict whether each token was replaced or not.
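The model families above differ mainly in what they are asked to predict during pre-training. The sketch below builds one toy training example for three of these objectives: next-token prediction (GPT-style), masked-token prediction (BERT-style), and replaced-token detection (ELECTRA-style). It is a simplified illustration: the function names are made up, the positions and the replacement word are hand-picked, whereas real training selects tokens at random and ELECTRA takes its replacements from a small generator network.

```python
tokens = ["the", "chef", "cooked", "the", "meal"]


def next_token_examples(tokens):
    """GPT-style: predict each token from the tokens to its left."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_example(tokens, position, mask_token="[MASK]"):
    """BERT-style: hide one token and predict it from context on both sides."""
    masked = tokens.copy()
    masked[position] = mask_token
    return masked, tokens[position]


def replaced_token_example(tokens, position, replacement):
    """ELECTRA-style: swap in a plausible token, then label each position
    as replaced (True) or original (False)."""
    corrupted = tokens.copy()
    corrupted[position] = replacement
    labels = [original != new for original, new in zip(tokens, corrupted)]
    return corrupted, labels


print(next_token_examples(tokens)[1])             # context and the token to predict
print(masked_example(tokens, 2))                  # masked input and the hidden token
print(replaced_token_example(tokens, 2, "ate"))   # corrupted input and per-token labels
```

In the first case the model only ever sees the words to the left, which suits text generation. In the second and third cases it sees the whole sentence, which suits tasks that require understanding a text rather than continuing it.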

Difference between GPT-3 and GPT-4

As of now, GPT-4 has just been released, so a detailed technical comparison of GPT-4 and GPT-3 is not yet possible. However, advances in performance and capabilities can already be seen. Here are the key differences between GPT-3 and GPT-4:

| | GPT-3 | GPT-4 |
| --- | --- | --- |
| Parameters | 175 billion | ca. 1 trillion (estimate, not officially confirmed) |
| Training data | Trained on a diverse range of sources including books, articles, and websites | Trained on more diverse and larger datasets including images as well as text |
| Languages | Supports over 40 different languages | Outperforms GPT-3 in 24 tested languages |
| Few-shot learning | Can generate coherent text with just a few examples | Better few-shot learning capabilities plus visual input |
| Context | Can understand context and generate text that is relevant to the given context | Can understand longer context and generate more relevant text |
| Accuracy | Has shown impressive accuracy in generating text | Improved accuracy, especially for highly complex tasks |
| Knowledge | Limited knowledge of events after 2021 | Limited knowledge of events after 2021 |
| Release | June 2020 (ChatGPT, built on GPT-3.5, followed in November 2022) | March 2023 |
| Availability | Variations available on the OpenAI Playground and available for commercial use via OpenAI pricing plans | Available via ChatGPT Plus subscription; API access to GPT-4 via an OpenAI waitlist |
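Few-shot learning, mentioned in the comparison above, means that the model picks up a task from a handful of examples placed directly in the prompt. The sketch below shows one way such a prompt could be assembled. The `Input:`/`Output:` layout and the helper name are assumptions chosen for illustration, not a format prescribed by OpenAI.

```python
def build_few_shot_prompt(examples: list[tuple[str, str]], query: str) -> str:
    """Combine a few worked examples and a new input into one prompt."""
    lines = []
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")  # blank line between examples
    lines.append(f"Input: {query}")
    lines.append("Output:")  # left open for the model to complete
    return "\n".join(lines)


# Hypothetical examples: turning product names into short slogans.
examples = [
    ("running shoes", "Go further with every step."),
    ("coffee machine", "Your perfect morning, one cup at a time."),
]
print(build_few_shot_prompt(examples, "noise-cancelling headphones"))
```

The model sees two completed pairs and one open `Output:` line, and is expected to continue the pattern for the new input.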

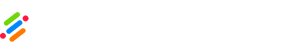

neuroflash as an example for GPT-3 and GPT-4 applications

neuroflash combines both GPT-3 and GPT-4 in many applications like content creation, AI chat, answering questions and much more. This lets users have a wide range of texts and documents created from a short briefing. With over 100 different text types, the neuroflash AI can generate texts for any purpose. For example, if you want to create a product description with neuroflash, you only have to briefly describe your product to the AI and the generator does the rest.

With neuroflash, you can tap into your creative potential and unleash your inner storyteller. Whether it’s a short story or an epic novel, this cutting-edge technology will help you craft compelling characters and captivating plots that will keep readers on the edge of their seats.

ChatFlash:

With integrated features like ready-to-use prompt templates and personalities, ChatFlash offers a more efficient alternative to ChatGPT.

- Templates: Get inspired by the large selection of text templates to get started even faster. Determine what kind of text you want to generate with ChatFlash and get suggestions for a suitable prompt right away.

- Personalities: You specify who you want the magic feather to be. With personalities you can customize the scope of the chat to get even more appropriate and targeted results. The output generated by ChatFlash is closely related to the selected personality and adapts to the context of the conversation.

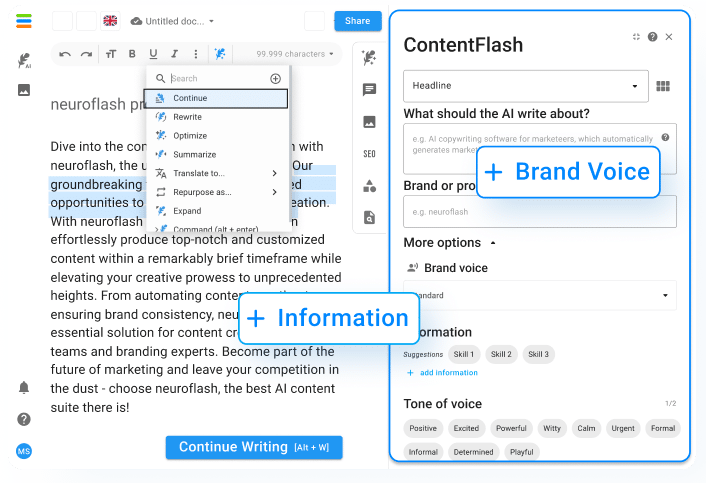

Workflow SEO-optimized blog article:

With our SEO workflow, you can rest assured that every article you produce will be optimized for maximum impact. Say goodbye to the frustration of low engagement rates and hello to a world where your content is king.

Our team of experts is dedicated to staying ahead of the game when it comes to SEO best practices, ensuring that our clients receive only the very best service possible. We understand how important it is for businesses like yours to stay competitive in an ever-changing digital landscape, which is why we’re committed to providing cutting-edge solutions designed specifically with your needs in mind.

The SEO workflow is only available for users on the Pro plan and above (and the old Power & Premium plans). Upgrade your account now.

While working with the Blog SEO workflow, you can optionally add more elements to optimize the result of your blog article. These are the must-see highlights of the new contentflash SEO workflow:

- Generation based on your keyword input

- Automatic SEO optimization (WDF*IDF)

- Connected to the Internet: Real-time detection of “users also ask” questions and creation of the respective answers.

- Multimedia: Integration of Unsplash images and YouTube videos into your article

- Suggestions of references for backlinks usage
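For readers unfamiliar with WDF*IDF, the sketch below computes it using one commonly cited formulation: WDF is the log-damped count of a term relative to the document length, and IDF rewards terms that appear in few documents of a corpus. This is an assumption for illustration only. How the neuroflash workflow calculates the score is not stated here, and the function names and sample documents are made up.

```python
import math


def wdf(term: str, document_words: list[str]) -> float:
    """Within-document frequency: log2(count + 1) / log2(document length).
    Assumes the document has at least two words."""
    frequency = document_words.count(term)
    return math.log2(frequency + 1) / math.log2(len(document_words))


def idf(term: str, documents: list[list[str]]) -> float:
    """Inverse document frequency: log10(1 + total documents / documents with the term)."""
    containing = sum(1 for words in documents if term in words)
    if containing == 0:
        return 0.0  # the term does not occur anywhere in the corpus
    return math.log10(1 + len(documents) / containing)


def wdf_idf(term: str, document_words: list[str], documents: list[list[str]]) -> float:
    """Weight of a term in one document relative to the whole corpus."""
    return wdf(term, document_words) * idf(term, documents)


# Tiny hypothetical corpus of tokenized documents.
documents = [
    ["llm", "models", "generate", "text"],
    ["seo", "text", "tips", "today"],
    ["llm", "llm", "seo", "guide"],
]
for term in ["llm", "seo", "guide"]:
    print(term, round(wdf_idf(term, documents[2], documents), 3))
```

A term scores highly when it is used often within one article but rarely across competing articles, which is why the metric is used to judge how well a text covers its keyword.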

Frequently asked questions

What are large language models in AI?

Large language models are advanced artificial intelligence systems that can understand, process and generate natural, human-like language. These models use vast amounts of data, primarily text and in some cases also images and audio, to learn how human language works. They are designed to process contextual information, so they can understand sentences and paragraphs as a whole, rather than interpreting each individual word independently.

One of the most famous large language models is GPT-3, or Generative Pre-trained Transformer 3, which was developed by OpenAI. It has the ability to generate coherent and natural-sounding text, and can even write essays, stories or poetry that are difficult to distinguish from something a human might write.

These models have potentially transformative implications across a wide range of industries, from content creation to customer service, communication, education and research. However, there are also ethical concerns around their development and implementation, particularly around issues like biased data and misuse by bad actors.

What is large language models theory?

The most prominent example of large language models is OpenAI’s GPT (Generative Pre-trained Transformer) series, which consists of multiple models trained on billions of words from internet sources. These models have been employed for various natural language processing tasks, including machine translation, sentiment analysis, and text summarization.

The key advantage of large language models is their ability to generate text that sounds natural and human-like, making them valuable tools in language-related industries such as content creation and copywriting. However, some critics argue that the use of large language models may have ethical implications, such as potential biases or the potential to be used for malicious purposes.

What are the major large language models?

There are several major large language models, including GPT-3, BERT, XLNet, and T5.

GPT-3, or Generative Pre-trained Transformer 3, is a natural language processing model developed by OpenAI that uses deep learning to generate human-like responses to text prompts. With 175 billion parameters, GPT-3 is currently one of the largest language models in existence.

BERT, or Bidirectional Encoder Representations from Transformers, is another large-scale language model developed by Google. It is pre-trained to build representations of text that draw on the context on both sides of each word, and it can then be fine-tuned for natural language processing tasks such as question answering.

XLNet, a successor to BERT, was developed by researchers at Carnegie Mellon University and Google. It uses a permutation-based approach to training, allowing it to better handle complex language tasks such as long-term dependencies and syntactic ambiguity.

Finally, T5, or Text-to-Text Transfer Transformer, is a language model developed by Google that can be easily fine-tuned for a variety of natural language processing tasks. It is capable of performing tasks such as summarization, translation, and question-answering, and has been used in applications ranging from chatbots to search engines.

Conclusion

In summary, Large Language Models represent a significant breakthrough in the development of artificial intelligence. As these models continue to improve, they will likely play an increasingly important role in our daily lives, from chatbots that provide customer support to virtual assistants that help us manage our schedules.

These models have significantly improved the performance of various natural language processing applications, including chatbots, language translation, text classification, and sentiment analysis. They have also enabled the development of novel language-based applications that can understand and generate human-like language content with high accuracy and efficiency.