AI image generators have become the next big thing on the internet. Join us in this blog post to discover which is the best AI image generator on the market. We compare Stable Diffusion, Midjourney, and DALL-E to help you make the best choice.

Round 1: Midjourney vs Stable Diffusion vs DALL-E 2

Founded by David Holz, Midjourney describes itself as “an independent research lab exploring new mediums of thought and expanding the imaginative powers of the human species”. It works as an interactive chat: you send commands and prompts to an AI through Discord, and it returns images based on your text prompts.

Midjourney has not published the details of its model architecture, so any description of its internals is tentative. What users can observe is that it does not produce the final image in a single step: it generates a series of intermediate images that are progressively refined into the final output.

This behavior is consistent with a diffusion-style process, in which generation starts from random noise and the image is refined over multiple iterations.

More about Midjourney pricing and subscription.

Pros of Midjourney

- Higher quality images: Midjourney AI image generation produces high-quality images by refining intermediate images that progressively enhance the final output image.

- Reduced computational requirements: The midjourney generator uses a smaller model configuration and requires lower computational resources, making it more accessible and less expensive than other generative models.

- More control over the output: The intermediate images shown during generation provide more control over and customization of the output image, which makes it easier to arrive at the desired type of output.

- Enhanced flexibility: Midjourney generators can be used to modify existing images, enabling the creation of unique, custom, and original images with different patterns, textures, colors, or morphologies.

- Better convergence: Midjourney image generation has shown better convergence properties, leading to decreased training times and faster convergence to the target output image, thus resulting in higher throughput.

- More natural-looking images: Midjourney generators produce more realistic and natural-looking images due to the progressive refinement of intermediate images, resulting in better final output results.

Cons of Midjourney

- More complex architecture: The architecture of Midjourney generators can be more complicated than traditional models, and it requires an understanding of the architecture to implement the model effectively.

- Longer Training Time: Midjourney generators can have longer training times overall than traditional generative models.

- The cost of computation may remain high: While the Midjourney generator can be configured to use fewer computational resources, the overall cost of computation can still be considerable.

- Dependency on large datasets: Midjourney imaging involves working with large datasets for training and validation purposes. Thus, Midjourney generators are dependent on the quantity and quality of the input data available.

- Difficulties in optimization: Midjourney image generation optimization can be difficult, making it necessary for the user to have in-depth knowledge and understanding of the architecture to optimize the algorithm performance.

- Limited Applications: So far, Midjourney generators have been used mostly for AI image generation, and they are less suited to applications where the intermediate states between input and output are not well defined.

Midjourney vs Stable Diffusion vs DALL-E summary:

The main advantage of Midjourney is that it can produce high-quality images despite using a smaller model configuration and lower computational resources. Midjourney also offers more flexibility and customization, with greater control through previews of the intermediate images that lead to the final output. In turn, Midjourney’s main disadvantages are related to its complexity and its data, computation, and optimization requirements. However, as its potential applications expand, its advantages are being leveraged for a wide range of AI image generation applications.

Round 2: Midjourney vs Stable Diffusion vs DALL-E 2

The term “stable diffusion” in AI image generation refers to a technique used to generate realistic images using the diffusion process. The diffusion process is a form of generative modeling that involves iteratively applying a diffusion algorithm to an initial random noise vector, leading to a series of updates that result in the eventual generation of a new image.
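The iterative idea described above can be pictured with a toy sketch. This is not a diffusion model: a real system predicts each update with a trained neural network and usually injects fresh noise along the way, whereas the hypothetical `toy_denoise_step` below simply nudges the pixels toward a known `target`. All names and values here are assumptions chosen for illustration.

```python
import random


def toy_denoise_step(pixels, target, strength=0.2):
    """Move every pixel a fraction of the way toward the target.

    Hypothetical stand-in for one learned denoising update.
    """
    return [p + strength * (t - p) for p, t in zip(pixels, target)]


def mean_abs_error(pixels, target):
    """Average absolute distance between the current pixels and the target."""
    return sum(abs(p - t) for p, t in zip(pixels, target)) / len(target)


def generate(target, steps=30, seed=0):
    """Start from random noise and refine it step by step."""
    rng = random.Random(seed)
    pixels = [rng.gauss(0.0, 1.0) for _ in target]  # initial random noise vector
    history = [pixels]
    for _ in range(steps):
        pixels = toy_denoise_step(pixels, target)
        history.append(pixels)  # keep every intermediate result
    return history


target = [i / 15 for i in range(16)]  # a tiny 16-pixel "image"
history = generate(target)
errors = [mean_abs_error(h, target) for h in history]
print(f"error at start: {errors[0]:.3f}, error at end: {errors[-1]:.3f}")
```

Each entry in `history` plays the role of an intermediate image, and the error shrinks with every update, which is the intuition behind refining noise into a picture.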

Stable Diffusion is a latent diffusion model released in 2022 by Stability AI in collaboration with the CompVis group and Runway. Rather than applying the diffusion process directly to pixels, it runs the iterative updates in a compressed latent space and then decodes the result into an image. The autoencoder that defines this latent space is trained with a regularization term that keeps the latent values well behaved. Together, these choices lower the computational cost of generation while still producing high-quality, realistic output images.

In summary, stable diffusion is an advanced technique for generating high-quality and stable images using the diffusion process. It is used to improve the effectiveness of AI image generation models, resulting in higher quality and more consistent generated images while reducing image artifacts and inconsistencies.

View some Stable Diffusion examples.

Pros of Stable Diffusion

- Higher Quality Images: Stable diffusion improves the quality of generated images by reducing the occurrence of image artifacts and blur, creating more realistic-looking images.

- High Accuracy: Stable diffusion is an excellent technique for generating high accuracy generative models, which helps in producing impressive and realistic images.

- Improved Consistency: Stable diffusion reduces inconsistencies between generated images, leading to a more consistent and polished final output.

- Better Handling of Large Datasets: Stable diffusion is better equipped to handle large datasets, making it an ideal choice for generating images on large-scale projects.

- Faster Training: Stable diffusion requires fewer iterations to converge, making it faster and more efficient for generating high-quality images.

- Greater Stability: The regularizing function incorporated into the stable diffusion algorithm stabilizes the diffusion updates, leading to better convergence for the training process.

Cons of Stable Diffusion

- Increased Complexity: Stable Diffusion is a more complex technique than other traditional models used for image generation, making it more demanding and requiring more computational resources.

- Limited Flexibility: Stable Diffusion requires specific tuning parameters during the training process, which can limit the flexibility of the model and its adaptability to different types of input data.

- Requires Large Datasets: Stable Diffusion requires large datasets for effective training, resulting in significant data preprocessing and curation, which can be expensive and time-consuming.

- Possibility of Overfitting: Stable Diffusion models may overfit to the training data, resulting in reduced performance in generating new and unseen images.

- Computational Resource Requirements: Stable Diffusion requires more computational resources, such as high-end GPUs, due to its complex architecture and the need for large amounts of training data.

- Longer Training Times: Stable Diffusion trains slower than traditional image generation methods due to its complex architecture, which can lead to longer training times.

Midjourney vs Stable Diffusion vs DALL-E summary:

In summary, Stable Diffusion has both advantages and disadvantages. Its advantages include higher quality images with greater stability, faster training, better handling of large datasets, improved consistency, and high accuracy. These benefits make it a valuable technique for improving AI image generation models. However, while Stable Diffusion can improve the quality of generated images, it requires careful tuning, large datasets, and more powerful computational resources. Practitioners must therefore weigh these factors when choosing between Stable Diffusion and more traditional techniques for generating AI images.

Round 3: Midjourney vs Stable Diffusion vs DALL-E 2

DALL-E 2 is an improved version of DALL-E, a text-to-image model that OpenAI introduced in January 2021. The original DALL-E used the transformer architecture to create images from natural language text descriptions. DALL-E 2, announced in April 2022, pairs CLIP text and image embeddings with a diffusion-based decoder to create photorealistic images from text.

DALL-E 2 has enhanced capability compared to its predecessor model, with the ability to perform advanced image manipulations, such as moving, rotating, and flipping objects within the image. DALL-E 2 generates new images based on the text inputs, having better natural text understanding and a broader range of vocabularies for image description.

DALL-E 2 takes natural language text as input and produces corresponding output images. It also supports editing an existing image through masking (often called inpainting): the user marks a region of the image, and the model regenerates that region so that it matches the text input.
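To make the masking idea concrete, here is a minimal sketch of how a mask can decide which pixels come from the original image and which come from newly generated content. It is not OpenAI's implementation; `generated` is a hypothetical stand-in for model output, and the 4x4 grid is an assumption chosen to keep the example readable.

```python
def composite_with_mask(original, generated, mask):
    """Use generated pixels where the mask is 1, original pixels elsewhere."""
    return [
        [g if m else o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


size = 4
original = [[0.0] * size for _ in range(size)]   # existing image (all dark)
generated = [[1.0] * size for _ in range(size)]  # hypothetical model output
# Mark the central 2x2 block as the region to be edited.
mask = [
    [1 if 1 <= row <= 2 and 1 <= col <= 2 else 0 for col in range(size)]
    for row in range(size)
]

edited = composite_with_mask(original, generated, mask)
for row in edited:
    print(row)
```

Only the masked block changes; everything outside it is copied from the original image unchanged.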

Receive detailed information on DALL-E 2.

Pros of DALL-E 2

- Improved Natural Language Understanding: DALL-E 2 is an improved version of DALL-E, with better natural language understanding capabilities based on the transformer architecture, making it ideal for generating high-quality images from text inputs.

- Advanced Image Manipulation: DALL-E 2 can perform advanced image manipulations such as moving, rotating, and flipping objects in the image, providing greater flexibility and customization of output visualizations.

- Image Customization: The DALL-E 2 model can create highly customized outputs, such as customizing patterns, designs, and shapes for objects, reflecting diverse user preferences and user-specific design use cases.

- Consistency of Output: DALL-E 2 generates consistent image outputs from a given natural language input, making it ideal for generating repeatable marketing or advertising material for businesses.

- Bi-Directional Generation: DALL-E 2 can produce images for inputs and descriptions of images, and conversely texts for a given image, thus enabling bidirectional generation.

- Extensive Vocabulary: DALL-E 2 has an extensive vocabulary suitable for generating images from a broad range of topics and descriptions, leading to greater versatility in generative applications.

Cons of DALL-E 2

- Limited Realism: Although DALL-E 2 generates high-quality images, there may be a limit to how realistic those images are when compared to real-life photographs. This limitation can constrain the use of DALL-E images where fine detail matters.

- Resource Management: DALL-E 2 requires advanced and more powerful computational resources to generate highly complex images, making it less accessible to individuals and businesses without the necessary resources to sustain it.

- Text Input Requirement: The image generation output by DALL-E 2 is reliant on the quality of the natural language input, limiting the scope of the image generation to getting a proper text description only.

- Quality of Input Text: DALL-E 2 needs text input that is not only adequate but also detailed and well structured in order to produce accurate, high-quality output images.

- Limited Applications: As DALL-E 2 is specifically programmed for generating photorealistic images from text descriptions, its scope of application is limited to specific use-cases like image or video production, marketing, and advertising, where complex, customized, and realistic images can have significant applications.

Midjourney vs Stable Diffusion vs DALL-E summary:

Overall, DALL-E 2 has clear advantages, including improved natural language understanding, advanced customization, consistency of output, bi-directional generation, and extensive vocabulary. These benefits make DALL-E 2 an ideal tool for designers, advertisers, and creatives looking to generate high-quality images from text descriptions and tailor designs to specific user needs. In turn, some disadvantages include the requirement of more powerful resources, the dependence on the quality and structure of the input text, the management of already created images, the limited scope of applications, and the limited realism potential.

Midjourney vs Stable Diffusion vs DALL-E 2: Who is the Winner?

It is difficult to declare an outright “best technique” among Midjourney, Stable Diffusion, and DALL-E 2, as their use cases vary with the specific application, data availability, computational resources, and expected output quality.

- Midjourney is useful in customizing output and providing more control over the generation process. It is computationally efficient and improves convergence times while producing more natural-looking images. Midjourney image generation is particularly useful in generating high-quality images while using limited computational resources, making it an excellent choice in design and video-based applications.

- Stable Diffusion, on the other hand, is useful in generating high-quality and stable images while reducing inconsistencies and artifacts in the images. The regularization function incorporated in Stable Diffusion stabilizes update dynamics during the training process, leading to higher final output quality. Stable Diffusion is well suited for generating AI images in healthcare, food, inventory management, supply chains or environmental sustainability applications.

- DALL-E 2 specializes in the generation of photorealistic images from natural language text, providing advanced image manipulation and customization capabilities with an extensive vocabulary. DALL-E 2 is an excellent choice where customized images, adherence to specific market or industry standards, and lifelike images are a high priority.

While all three techniques have their advantages and limitations, practitioners should choose based on the nature of their data, the computational resources available, the specific application, the desired output quality, the training time, and the technical know-how required to use each technique effectively.

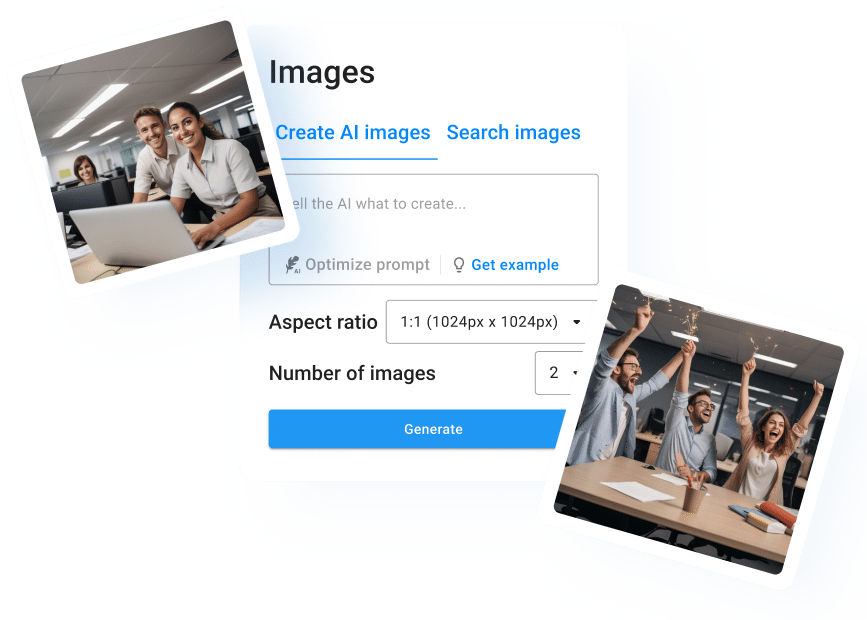

Want to try AI image generation for free? Then use ImageFlash!

Besides those three, there are plenty of other AI image generators on the market. One of them is ImageFlash, which works with a version of one of the Stable Diffusion models. So, if you are looking for an AI image generator that you can use for free and without a subscription, look no further! With ImageFlash you can create AI-generated photos from a simple description, completely for free. Here is how it works:

Envision the image in your mind and communicate your vision to the neuroflash image generator in a short sentence. With the aid of our magic pen tool, you don’t even have to think about how to further optimize your prompt. neuroflash can effortlessly and automatically optimize your prompt for you and help you achieve even better results:

Choose how many images you want the AI to generate for you. You can select up to four images. Afterwards, neuroflash processes your prompt and creates matching results. You can save, share or otherwise use your images. All images generated by the AI are completely copyright free!

So what are you waiting for? Turn your creativity and imagination into unique, royalty-free images for free and with no subscription!

AI Images generated by ImageFlash

With ImageFlash, you’re able to generate all the images you’re envisioning in the span of only a few seconds. Make sure to use a detailed description (prompt) for the best results. Try it out yourself now.

Frequently asked questions:

Is DALL-E 2 better than Stable Diffusion?

DALL-E 2 and Stable Diffusion serve different purposes in AI image generation. Stable Diffusion is a technique that emphasizes stability and the reduction of artifacts during the image generation process, while DALL-E 2 is a text-to-image model that generates photorealistic images from natural language text.

Comparing the two techniques directly isn’t straightforward as they tackle specific use cases in generating images. They both have advantages and disadvantages, with DALL-E 2 having a more significant focus on customization capability, advanced image manipulation, and the ability to use natural language text as input, while Stable Diffusion focuses on generating high-quality and stable images with fewer artifacts.

Is Midjourney or Stable Diffusion better?

Midjourney and Stable Diffusion are both generative models that are useful in AI image generation, but they are utilized for different purposes. Stable Diffusion is focused on generating high-quality and stable images while reducing artifacts, while Midjourney is designed to provide more control and customization over the image generation process.

As such, there is no straightforward “better” technique between Midjourney and Stable Diffusion as their functional purposes differ. Midjourney image generation is more efficient with computational power, requires less data to generate high-quality outputs, and enables customization of image generation output. Stable Diffusion, on the other hand, produces stable and high-quality images by applying regularization functions to the diffusion updates, facilitating a higher-quality output.

What is the difference between DALL-E 2 and Midjourney?

DALL-E 2 is a text-to-image model that generates photorealistic images from natural language text descriptions; its generative capabilities focus on creating highly customized and realistic images from natural language input. DALL-E 2 focuses closely on the language aspect and relies on an extensive vocabulary to generate high-quality outputs that match the text input.

Midjourney, on the other hand, is used to provide intermediate images during the image generation process. Its particular advantage lies in the level of control one has over that process. Midjourney enables efficient customization of generated images, with intermediate images progressively refining the final output.

In summary, the primary difference between DALL-E 2 and Midjourney is the technique used to generate images. DALL-E 2 relies on natural language text descriptions, while Midjourney generates intermediate images to increase control and customization options during image production. Consequently, they suit different use cases: DALL-E 2 is effective at producing photorealistic images from natural language, and Midjourney is used when more control over the image generation process is necessary.

Is Midjourney based on Stable Diffusion?

Midjourney and Stable Diffusion are two different generative modeling techniques.

Midjourney is a technique that generates intermediate images during the image generation process while providing more control and customization options within the image generating process. Midjourney models can function on top of the stable diffusion process, using diffusion models to generate intermediate images. However, Midjourney can also be used in conjunction with other generative models.

Stable Diffusion, on the other hand, is a generative model that produces high-quality, stable images while reducing artifacts during the creation process. Diffusion models are trained by gradually adding Gaussian noise to images and learning to remove it, which allows them to model complex image distributions. At generation time the model starts from noise and removes it step by step, so intermediate images also arise in Stable Diffusion; iterative refinement by itself is not what separates it from Midjourney.
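The "add Gaussian noise, then remove it" idea can be sketched with the closed-form noising step commonly used for diffusion models, x_t = sqrt(a_t) * x_0 + sqrt(1 - a_t) * noise, where a_t is the cumulative product of (1 - beta). The linear beta schedule and the step count below are illustrative assumptions and are not Stable Diffusion's actual configuration. The `remove_noise` helper cheats by reusing the exact noise that was added; a trained model would have to predict it.

```python
import math
import random


def make_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta) for a linear beta schedule (assumed values)."""
    alpha_bar, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bar.append(running)
    return alpha_bar


def add_noise(x0, t, alpha_bar, rng):
    """Jump straight to timestep t by mixing the clean signal with Gaussian noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    keep, spread = math.sqrt(alpha_bar[t]), math.sqrt(1.0 - alpha_bar[t])
    return [keep * x + spread * n for x, n in zip(x0, noise)], noise


def remove_noise(xt, noise, t, alpha_bar):
    """Invert add_noise when the noise is known exactly (a model would estimate it)."""
    keep, spread = math.sqrt(alpha_bar[t]), math.sqrt(1.0 - alpha_bar[t])
    return [(x - spread * n) / keep for x, n in zip(xt, noise)]


rng = random.Random(0)
alpha_bar = make_alpha_bar()
x0 = [1.0] * 8  # a tiny clean "image"
xt, noise = add_noise(x0, 499, alpha_bar, rng)
recovered = remove_noise(xt, noise, 499, alpha_bar)
print(f"share of signal kept at t=499: {math.sqrt(alpha_bar[499]):.3f}")
```

As `t` grows, less of the original signal survives, and by the last step the result is almost pure noise. That is why generation can start from random noise and work backwards.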

What is the future of AI?

There are many reasons to be optimistic about the future of AI. First, the rapid pace of progress in AI technology is showing no signs of slowing down. Second, AI is increasingly being used in a variety of industries and applications, which only increases its impact on our lives. Finally, the potential for AI to positively impact humanity is immense. So, what can we expect from AI in the future? Read more about it here.

How does an AI image generator work?

As previously noted, Artificial Intelligence image generators are computer programs that create new images. To create an AI image generator, one must first design a “generative model” of how images are created, which is based on a set of rules defining how the basic elements of an image can be combined. After the generative model is complete, it can generate new images. There are two main types of AI-image generators: those that produce “photorealistic” images, and those that create “abstract” or “stylized” ones.
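As a rough illustration of "build a generative model, then generate new images", the sketch below fits about the simplest model possible, an independent Gaussian per pixel, to a set of training images and then samples a new image from it. Real image generators learn far richer models with neural networks; the random training data and all function names here are hypothetical.

```python
import random
import statistics


def fit_pixel_gaussian(images):
    """Estimate a mean and standard deviation for every pixel position."""
    columns = list(zip(*images))  # group values by pixel position
    means = [statistics.mean(column) for column in columns]
    stdevs = [statistics.pstdev(column) for column in columns]
    return means, stdevs


def sample_image(means, stdevs, rng):
    """Draw one new image from the fitted per-pixel Gaussians."""
    return [rng.gauss(m, s) for m, s in zip(means, stdevs)]


rng = random.Random(0)
# 200 made-up training "images" of 16 pixels each, standing in for a real dataset.
training = [[rng.random() for _ in range(16)] for _ in range(200)]

means, stdevs = fit_pixel_gaussian(training)
new_image = sample_image(means, stdevs, rng)
print(len(new_image), "pixels generated")
```

The two phases mirror the description above: first the model's parameters are estimated from data, and only then can it be used to produce images that were not in the training set.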

How can I generate AI-images?

Our top recommendation for an AI image generator is neuroflash.

But don’t just take our word for it – try out neuroflash today and see the results for yourself! Whether you’re a professional designer or an amateur hobbyist, we guarantee that this powerful tool will revolutionize the way you approach image generation forevermore.

Conclusion

In summary, it’s difficult to determine which of Midjourney, Stable Diffusion, and DALL-E 2 is the best AI image generator. All of them bring different advantages and disadvantages to the table. In the end, each person decides for themselves which generator works best for them. And if you can’t decide between the three, you can always try out neuroflash’s own image generator to create outstanding photos.