What is Stable Diffusion?

Stable Diffusion is a text-to-image artificial intelligence (AI) model that generates new images from a written prompt. It belongs to a family of generative models known as diffusion models. During training, noise is added to images in a structured and controlled manner, step by step, and the model learns to reverse that process. During generation, the model starts from random noise and removes it gradually until an image emerges.

Stable Diffusion builds on diffusion probabilistic models (DPMs), which underlie several state-of-the-art image generators. It is a latent diffusion model: the denoising runs on a compressed representation of the image rather than on the pixels themselves, which keeps generation fast enough for consumer hardware. Because every run starts from a different random noise sample, the process contains a controlled amount of randomness, which allows the model to generate more varied and interesting images while the step-by-step denoising stays stable.

The goal of Stable Diffusion is to produce high-quality and realistic outputs that resemble the training data.
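The controlled noising described above can be illustrated with a small sketch. It assumes the linear noise schedule commonly used for denoising diffusion models (1,000 steps, with per-step noise rising from 0.0001 to 0.02); the function names `make_schedule` and `add_noise` are made up for this example, and a random array stands in for a real image. Stable Diffusion applies the same idea to a compressed version of the image rather than to raw pixels.

```python
import numpy as np


def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Return per-step noise levels and the cumulative share of signal kept."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alpha_bar = np.cumprod(1.0 - betas)  # fraction of signal variance left at step t
    return betas, alpha_bar


def add_noise(x0, t, alpha_bar, rng):
    """Jump straight to step t: mix the clean image with Gaussian noise."""
    eps = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return xt, eps


rng = np.random.default_rng(0)
_, alpha_bar = make_schedule()
image = rng.uniform(-1.0, 1.0, size=(64, 64))  # stand-in for a real image

for t in (0, 250, 999):
    noisy, _ = add_noise(image, t, alpha_bar, rng)
    similarity = np.corrcoef(image.ravel(), noisy.ravel())[0, 1]
    print(f"step {t}: correlation with the original image = {similarity:.3f}")
```

Early steps leave the image almost untouched, while the last step is close to pure noise. A diffusion model is trained to predict the noise (`eps`) from the noisy image, which is what later lets it run the process backwards.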

Stable Diffusion examples: How do they work?

To understand Stable Diffusion, let’s take the example of generating an image. The process involves the following steps:

- Starting Point: You begin with an image of pure random noise, or with an existing image to which noise has been added.

- Iterative Transformation: The image goes through a series of denoising steps. At each step, the model predicts the noise present in the image and removes part of it, which refines colors, shapes, textures, and other visual elements.

- Gradual Improvement: As the iterations progress, the generated image gradually becomes more recognizable and closer to the desired output. The patterns and structures the AI model learned from its training data guide the transformation process.

- Prompt Guidance: Throughout the iterations, the text prompt steers the model’s noise predictions toward images that match the description. The model does not compare the generated image to the training data while generating; what it knows about realistic images is stored in its trained weights.

- Convergence: The iterations run for a fixed number of steps, typically a few dozen, after which the noise has been removed. The result is a generated image that can be visually appealing and, at its best, hard to distinguish from real images.

Diffusion models such as Stable Diffusion differ from earlier generative models, which typically produce an image in a single pass, by gradually transforming the input over many small steps. This allows for more controlled and stable generation of realistic outputs and helps address some of the challenges faced by earlier models, such as blurry images or unstable training.

Overall, Stable Diffusion provides a way to generate high-quality and visually appealing data by iteratively refining and improving an initial input, resulting in outputs that closely resemble the training data.
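The refinement loop described above can be sketched in a few lines. This is a toy and not Stable Diffusion itself: the "training data" is assumed to be plain numbers drawn from a bell curve (mean 2, standard deviation 0.5), so the ideal noise predictor can be written as a formula where a real model would use a trained neural network. The update rule is the standard ancestral sampling step of denoising diffusion models; `MU`, `SIGMA`, `predict_noise`, and `sample` are names chosen for this illustration.

```python
import numpy as np

MU, SIGMA = 2.0, 0.5  # toy "training data": numbers drawn from N(2, 0.5^2)


def predict_noise(x_t, alpha_bar_t):
    """Exact expected noise in x_t for Gaussian data (stands in for a neural network)."""
    signal = np.sqrt(alpha_bar_t)
    var_t = alpha_bar_t * SIGMA**2 + (1.0 - alpha_bar_t)
    return np.sqrt(1.0 - alpha_bar_t) * (x_t - signal * MU) / var_t


def sample(n_samples, num_steps=1000, seed=0):
    """Start from pure noise and denoise step by step."""
    rng = np.random.default_rng(seed)
    betas = np.linspace(1e-4, 0.02, num_steps)  # assumed linear noise schedule
    alphas = 1.0 - betas
    alpha_bar = np.cumprod(alphas)

    x = rng.standard_normal(n_samples)  # starting point: random noise
    for t in range(num_steps - 1, -1, -1):
        eps_hat = predict_noise(x, alpha_bar[t])
        # Remove the predicted share of noise for this step.
        x = (x - betas[t] / np.sqrt(1.0 - alpha_bar[t]) * eps_hat) / np.sqrt(alphas[t])
        if t > 0:
            # Every step except the last adds back a little fresh randomness.
            x = x + np.sqrt(betas[t]) * rng.standard_normal(n_samples)
    return x


samples = sample(20_000)
print(f"mean = {samples.mean():.2f}, std = {samples.std():.2f}")
```

The generated numbers end up distributed like the toy training data even though the loop never looks at that data directly. Everything it needs is contained in the noise predictor, which is the role the trained network plays in a real image model.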

Stable Diffusion Examples: 4 AI-generated categories

Since coming up with the perfect image prompt can be difficult, a huge public database of Stable Diffusion prompts lists over 9 million prompts for you to use. For these examples, prompts about topics such as space, the universe, magic, and fantasy have been used. Let’s take a look.

Space

Prompt: astronaut looking at a nebula , digital art , trending on artstation , hyperdetailed , matte painting , CGSociety

Universe

Prompt: Entering the Fifth Dimension. Photorealistic. Masterpiece.

Magic

Prompt: modern street magician holding playing cards, realistic, modern, intricate, elegant, highly detailed, digital painting, artstation, concept art, addiction, chains, smooth, sharp focus, illustration, art by ilja repin

Fantasy

Prompt: Hedgehog magus, gaia, nature, fairy, forest background, magic the gathering artwork, D&D, fantasy, cinematic lighting, centered, symmetrical, highly detailed, digital painting, artstation, concept art, smooth, sharp focus, illustration, volumetric lighting, epic Composition, 8k, art by Akihiko Yoshida and Greg Rutkowski and Craig Mullins, oil painting, cgsociety
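If you would like to run prompts like these yourself, the sketch below shows one possible way to do it with the open-source Hugging Face `diffusers` library. Several things here are assumptions rather than part of the examples above: the model ID `CompVis/stable-diffusion-v1-4` (any compatible checkpoint should work), the default of 30 steps and a guidance scale of 7.5, and the cap of four images per call, which is only an illustrative guard to keep memory use low. The helper name `generate_images` is made up. The first call downloads several gigabytes of model weights, and a GPU is strongly recommended.

```python
def generate_images(prompt, n_images=1, steps=30, guidance_scale=7.5, seed=0,
                    model_id="CompVis/stable-diffusion-v1-4"):
    """Generate images for a text prompt (hypothetical helper, see assumptions above)."""
    if not 1 <= n_images <= 4:
        raise ValueError("n_images must be between 1 and 4")

    # Imported lazily so the argument check above works without the heavy packages.
    import torch
    from diffusers import StableDiffusionPipeline

    device = "cuda" if torch.cuda.is_available() else "cpu"
    dtype = torch.float16 if device == "cuda" else torch.float32

    pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=dtype)
    pipe = pipe.to(device)

    # A fixed seed makes the starting noise, and so the result, repeatable.
    generator = torch.Generator(device=device).manual_seed(seed)
    result = pipe(
        prompt,
        num_inference_steps=steps,      # number of denoising steps
        guidance_scale=guidance_scale,  # how strongly the prompt steers each step
        num_images_per_prompt=n_images,
        generator=generator,
    )
    return result.images  # list of PIL images


# Example usage (requires `pip install diffusers transformers torch`):
# images = generate_images("Entering the Fifth Dimension. Photorealistic. Masterpiece.")
# images[0].save("universe.png")
```

Changing the seed gives a different image for the same prompt, while raising the number of steps trades speed for detail.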

Stable Diffusion examples with neuroflash

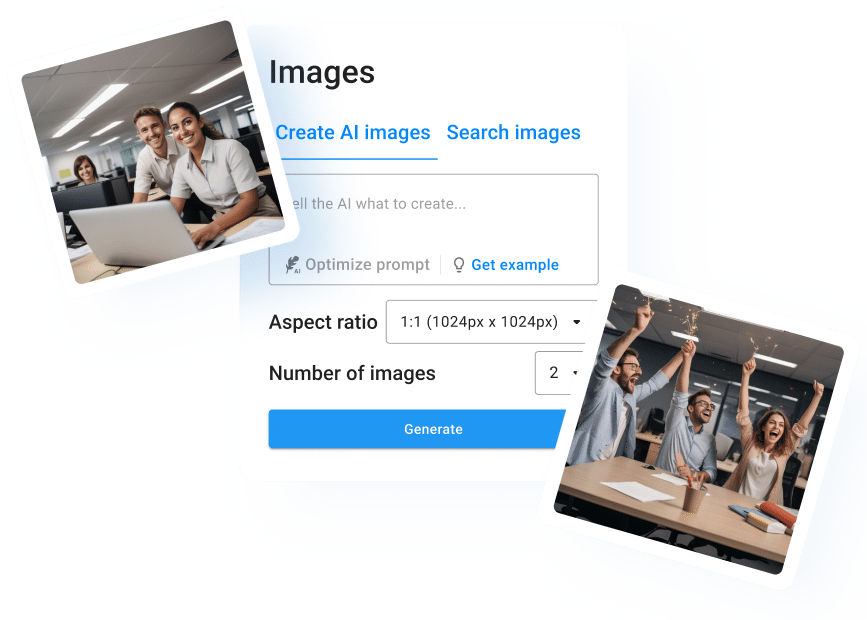

If you want to try out Stable Diffusion with the help of an AI image generator, then look no further! With the neuroflash image generator, you can create AI-generated images completely for free. Here is how it works:

Envision the image in your mind and communicate your vision to the neuroflash image generator in a short sentence. With the aid of our magic pen tool, you don’t even have to think about how to further optimize your prompt. neuroflash can automatically optimize your prompt for you and help you achieve even better results.

Choose how many images you want the AI to generate for you. You can select up to four images. Afterwards, neuroflash processes your prompt and creates matching results. You can save, share or otherwise use your images. All images generated by the AI are completely copyright free!

So what are you waiting for? Turn your creativity and imagination into unique, royalty-free images for free and with no subscription!

Let’s see how the neuroflash image generator performs with the same Stable Diffusion text prompts:

Space - Stable Diffusion example with neuroflash

Prompt: astronaut looking at a nebula , digital art , trending on artstation , hyperdetailed , matte painting , CGSociety

Universe - Stable Diffusion example with neuroflash

Prompt: Entering the Fifth Dimension. Photorealistic. Masterpiece.

Magic - Stable Diffusion example with neuroflash

Prompt: modern street magician holding playing cards, realistic, modern, intricate, elegant, highly detailed, digital painting, artstation, concept art, addiction, chains, smooth, sharp focus, illustration, art by ilja repin

Fantasy - Stable Diffusion example with neuroflash

Prompt: Hedgehog magus, gaia, nature, fairy, forest background, magic the gathering artwork, D&D, fantasy, cinematic lighting, centered, symmetrical, highly detailed, digital painting, artstation, concept art, smooth, sharp focus, illustration, volumetric lighting, epic Composition, 8k, art by Akihiko Yoshida and Greg Rutkowski and Craig Mullins, oil painting, cgsociety

Frequently asked questions

What is Stable Diffusion?

In AI image generation, Stable Diffusion is a text-to-image model built on the concept of diffusion, where information is gradually spread out and blended in. During training, noise is added to images little by little until the original content is lost, and the model learns to undo this one step at a time. To generate an image, the process runs in reverse: the model starts from pure noise and removes a little of it at each step, until the noise is reduced to zero and a final image is produced.

This repeated, gradual denoising is what keeps generation stable and the resulting images high quality. Stable Diffusion builds on diffusion probabilistic models (DPMs), which underlie many state-of-the-art image generation models. Because every run starts from a different random noise sample, the process contains a controlled amount of randomness, which allows the model to generate more varied and interesting images while maintaining stability.

What are the types of Stable Diffusion?

Strictly speaking, the techniques below are not types of Stable Diffusion. They are mathematical methods related to how diffusion-based image generators sample and train. A few popular ones are:

- Langevin Diffusion: Langevin dynamics is a sampling method that repeatedly nudges a sample in the direction that makes it more likely under the model, while adding a small amount of random noise at every step. It is used in score-based generative models and is related to, but not the same as, stochastic gradient Langevin dynamics, which applies the idea to training neural networks.

- Fokker-Planck Diffusion: The Fokker-Planck equation describes how the probability distribution of a continuous-time diffusion process, defined by a drift function and a diffusion coefficient, changes over time. In continuous-time formulations of diffusion models, it describes how the distribution of images gradually turns into noise.

- Markov Chain Monte Carlo: This is a family of techniques that simulate a Markov chain to sample from a probability distribution. Langevin sampling is one example, and the step-by-step denoising of a diffusion model is itself a Markov chain, since each step depends only on the result of the previous one.

- Adversarial Diffusion: This approach combines diffusion with the two-network setup known from GANs. A discriminator learns to tell real and generated images apart, and its feedback is used to train the generator, for example so that it can produce convincing images in far fewer denoising steps.
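To make the Langevin idea from the list above concrete, here is a minimal sketch. It assumes a target distribution whose gradient is known exactly (a bell curve with mean 2 and standard deviation 0.5); in a generative model, a neural network would estimate this gradient instead. The names `score` and `langevin_sample`, as well as the step size and number of steps, are choices made for this illustration.

```python
import numpy as np

MU, SIGMA = 2.0, 0.5  # assumed target distribution: N(2, 0.5^2)


def score(x):
    """Gradient of the log-density of the target: points toward likelier values."""
    return -(x - MU) / SIGMA**2


def langevin_sample(n_samples, step=0.01, n_steps=500, seed=0):
    """Unadjusted Langevin dynamics: small uphill move plus fresh noise, repeated."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(n_samples)  # arbitrary starting points
    for _ in range(n_steps):
        noise = rng.standard_normal(n_samples)
        x = x + 0.5 * step * score(x) + np.sqrt(step) * noise
    return x


samples = langevin_sample(20_000)
print(f"mean = {samples.mean():.2f}, std = {samples.std():.2f}")
```

The noise term is what turns a plain optimizer into a sampler: without it, every point would simply slide to the single most likely value instead of spreading out over the whole distribution.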

What applications does Stable Diffusion have?

Stable Diffusion techniques have several possible applications in marketing and content creation. Some possible scenarios include:

- Content and Image Generation: Stable Diffusion techniques can help generate high-quality and diverse image content in various domains relevant to marketing, such as e-commerce, social media, or advertising.

- Creative Editing and Design: Stable Diffusion can be used to edit and enhance existing images, for example by regenerating a selected region (inpainting), extending an image beyond its borders (outpainting), or reworking an image based on a new prompt (image-to-image), to create more engaging and impactful visuals.

- Personalization and Recommendation: Stable Diffusion can be used to generate personalized visuals, for example to illustrate product recommendations with prompts built from the user’s preferences or history. The recommendations themselves come from a separate system.

- Style Transfer: Stable Diffusion can be used to apply the style of one image or brand to another, such as recreating a vintage or retro look for a new product or campaign.

- Interactive Experiences: Stable Diffusion techniques can help create interactive and engaging visual experiences, such as augmented or virtual reality, that allow users to explore and interact with digital content in new ways.

These are some of the possible applications of Stable Diffusion techniques in marketing and content creation, as they allow for more efficient, creative, and impactful generation and editing of image content.

What is the latest Stable Diffusion?

Stable Diffusion is still under active development, and new versions are released regularly. The original 1.x models from 2022 were followed by Stable Diffusion 2.x, Stable Diffusion XL (SDXL) in 2023, and Stable Diffusion 3 in 2024. The underlying method, latent diffusion, was proposed in a research paper by Rombach et al. published in 2022. It builds on Denoising Diffusion Probabilistic Models (DDPMs), introduced by Ho et al. in 2020, a type of generative model that uses an iterative diffusion process to generate high-quality and diverse images.

These models generate images by stochastic sampling: each denoising step applies the model’s learned noise prediction and adds a small amount of fresh randomness. Published results have shown diffusion models matching or exceeding other state-of-the-art generative models, such as GANs, in image quality and diversity. However, it’s important to note that this is a rapidly evolving field of research, and new techniques emerge frequently.

Will Stable Diffusion be able to replace humans?

Useful tips on creating your own Stable Diffusion examples

Useful tips on Stable Diffusion examples text prompts

- A person walking through a field of tall grass

- A close-up of a flower

- A bird’s-eye view of a cityscape

- A sunset over the ocean

- A child playing with a toy
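Prompts like the ones shown earlier in this article usually combine a plain subject, such as the ideas above, with a list of comma-separated style keywords. The small helper below sketches one way to assemble such prompts consistently. The function name `build_prompt` and the choice to drop repeated keywords are assumptions made for this example, not a requirement of Stable Diffusion.

```python
def build_prompt(subject, modifiers=()):
    """Join a subject and style keywords into one comma-separated prompt."""
    parts = [subject.strip()]
    seen = {parts[0].lower()}
    for modifier in modifiers:
        cleaned = modifier.strip()
        # Skip empty entries and keywords that were already added.
        if cleaned and cleaned.lower() not in seen:
            parts.append(cleaned)
            seen.add(cleaned.lower())
    return ", ".join(parts)


prompt = build_prompt(
    "A sunset over the ocean",
    ["digital art", "highly detailed", "cinematic lighting", "Digital Art"],
)
print(prompt)
```

Keeping the subject first and the style keywords after it makes it easy to reuse the same set of keywords across several subjects and compare the results.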