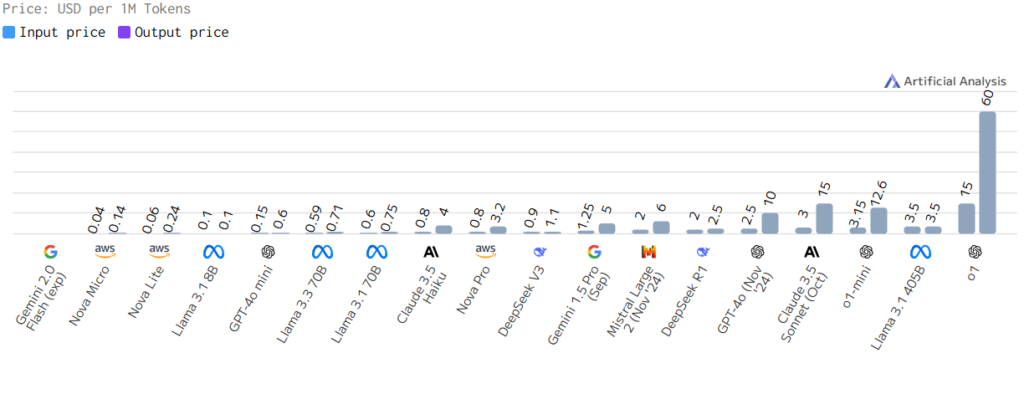

Whether you’re building a cutting-edge chatbot, automating complex tasks, or developing creative content, Google’s Gemini models have a lot to offer. However, understanding the cost of using these models is crucial. The Gemini family includes several models tailored to different needs, each with its own performance characteristics and, consequently, its own pricing. This article breaks down the pricing of the different Gemini models to help you choose the right one for your needs and budget.

The Basics of AI Model Pricing

Before diving into specific Gemini model costs, it’s important to understand some fundamental concepts of AI model pricing. The primary method of charging for AI models like Gemini is through tokens.

Tokens and Their Importance: Tokens are essentially parts of words or characters. When you send text or other data to an AI model, it’s broken down into tokens, and you are charged based on the number of tokens processed. As a rough estimate, 1000 tokens is equivalent to about 750 words, but this can fluctuate depending on the complexity of the text.
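To make the rule of thumb above concrete, here is a minimal sketch that estimates a token count from a word count. The function name `estimate_tokens` and the whitespace-based word split are assumptions made for illustration; a real tokenizer will give different numbers, so treat the result as a budgeting estimate only.

```python
# Rule of thumb from the text: 1,000 tokens is roughly 750 words.
# This is a heuristic, not how the model actually tokenizes text.
WORDS_PER_TOKEN = 750 / 1000


def estimate_tokens(text: str) -> int:
    """Estimate the token count of `text` from its whitespace-separated word count."""
    word_count = len(text.split())
    return round(word_count / WORDS_PER_TOKEN)


sample = "word " * 750  # a 750-word sample
print(estimate_tokens(sample))  # 1000
```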

Input vs Output Tokens: You are charged for both the number of input tokens you send to the model and the output tokens it generates. For example, if you send a long paragraph to the AI, you are charged for the number of tokens in that paragraph. Similarly, if the AI generates a summary in response, you are also charged for the tokens in that summary.

Pay-as-you-go Model: Most Gemini models operate on a pay-as-you-go pricing model. This means that you pay only for what you actually use.

Factors Affecting Cost: Aside from input and output tokens, the overall cost is affected by:

Model Complexity: More advanced models with enhanced capabilities may come at a higher price.

Usage Patterns: Consistent high usage will, naturally, lead to higher costs.

Specific Features: Some features or more complex functionality may carry additional costs.

Gemini Model Pricing Breakdown

Let’s dive into specific pricing details of the available Gemini models:

1. Gemini 1.5 Flash:

Focus: This model is designed specifically for speed and low latency and is now generally available for production use. It excels at performing diverse, repetitive tasks quickly and is notable for its 1 million token context window, allowing it to handle large inputs.

Pricing: Gemini 1.5 Flash is offered free of charge within the Gemini API’s “free tier”, which comes with certain rate limits. Additionally, Google AI Studio usage remains completely free in all available countries for this model.

Input Tokens: Free of charge.

Output Tokens: Free of charge.

Rate Limits (Free Tier):

15 RPM (requests per minute)

1 million TPM (tokens per minute)

1,500 RPD (requests per day)

Context Caching: Free of charge, up to 1 million tokens of storage per hour.
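As a rough way to reason about the free-tier limits listed above, the following sketch checks whether a planned workload stays within them. The `RateLimits` class and the `fits` helper are hypothetical names introduced here, and the check assumes every request uses about the same number of tokens.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RateLimits:
    rpm: int  # requests per minute
    tpm: int  # tokens per minute
    rpd: int  # requests per day


# Free-tier limits for Gemini 1.5 Flash as quoted in this article.
FLASH_FREE_TIER = RateLimits(rpm=15, tpm=1_000_000, rpd=1_500)


def fits(limits: RateLimits, requests_per_minute: int,
         tokens_per_request: int, requests_per_day: int) -> bool:
    """Return True if the planned workload stays within all three limits."""
    tokens_per_minute = requests_per_minute * tokens_per_request
    return (
        requests_per_minute <= limits.rpm
        and tokens_per_minute <= limits.tpm
        and requests_per_day <= limits.rpd
    )


print(fits(FLASH_FREE_TIER, 10, 2_000, 1_000))  # True
print(fits(FLASH_FREE_TIER, 20, 2_000, 1_000))  # False: exceeds 15 RPM
```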

Pay-as-you-go: Scale your AI service with confidence using the Gemini API pay-as-you-go billing service. Set up billing easily in Google AI Studio by clicking on “Get API key”. The following prices apply:

Rate Limits (Pay-as-you-go):

2,000 RPM (requests per minute)

4 million TPM (tokens per minute)

Prompts Up to 128k Tokens:

Input Tokens: $0.075 per 1 million tokens

Output Tokens: $0.30 per 1 million tokens

Context Caching: $0.01875 per 1 million tokens

Prompts Longer than 128k Tokens:

Input Tokens: $0.15 per 1 million tokens

Output Tokens: $0.60 per 1 million tokens

Context Caching: $0.0375 per 1 million tokens

Context Caching (storage): $1.00 per 1 million tokens per hour

Tuning Price: Input/output prices are the same for tuned models. Tuning service is free of charge.

Grounding with Google Search: $35 per 1,000 grounding requests (for up to 5,000 requests per day).

Used to Improve our Products: No

Best Use Cases: This model is ideal for high-volume production environments that need fast processing of repetitive tasks, such as real-time chatbots, instant language translation, mobile applications, content summarization, and automation. Pay-as-you-go billing lets it scale with your requirements, and its large context window makes it suitable for processing extensive documents and other large text inputs.
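The two pay-as-you-go price tiers for Gemini 1.5 Flash can be sketched as a small cost function. This is an illustration, not an official calculator: it assumes "128k" means 128,000 tokens and that the tier is chosen by the prompt (input) length and then applied to both input and output tokens. The name `flash_request_cost` is made up for this example.

```python
# Assumption: "128k" is treated as 128,000 tokens.
LONG_PROMPT_THRESHOLD = 128_000

# Gemini 1.5 Flash prices in USD per 1 million tokens, as quoted in this article.
FLASH_PRICES = {
    "short": {"input": 0.075, "output": 0.30},  # prompts up to 128k tokens
    "long": {"input": 0.15, "output": 0.60},    # prompts longer than 128k tokens
}


def flash_request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost in USD of a single request.

    Assumption: the prompt length selects the tier for the whole request.
    """
    tier = "long" if input_tokens > LONG_PROMPT_THRESHOLD else "short"
    prices = FLASH_PRICES[tier]
    return (input_tokens * prices["input"]
            + output_tokens * prices["output"]) / 1_000_000


print(f"${flash_request_cost(100_000, 2_000):.4f}")  # $0.0081
print(f"${flash_request_cost(200_000, 2_000):.4f}")  # $0.0312
```

Doubling the prompt from 100,000 to 200,000 tokens roughly quadruples the cost here, because the request both grows and crosses into the higher-priced tier.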

2. Gemini 1.5 Pro:

Focus: This model is designed to offer a robust balance of performance and capabilities for a variety of use cases. It aims to provide high-quality AI results while also being efficient in terms of resource utilization.

Pricing: Gemini 1.5 Pro is currently offered free of charge within the Gemini API’s “free tier”, which has lower rate limits intended for testing and experimentation. Additionally, Google AI Studio usage is completely free in all available countries for both models.

Input Tokens: Free of charge.

Output Tokens: Free of charge.

Rate Limits (Free Tier):

2 RPM (requests per minute)

32,000 TPM (tokens per minute)

50 RPD (requests per day)

Pay-as-you-go: Scale your AI service with confidence using the Gemini API pay-as-you-go billing service. Set up billing easily in Google AI Studio by clicking on “Get API key”. The following prices apply:

Rate Limits (Pay-as-you-go):

1,000 RPM (requests per minute)

4 million TPM (tokens per minute)

Prompts Up to 128k Tokens:

Input Tokens: $1.25 per 1 million tokens

Output Tokens: $5.00 per 1 million tokens

Context Caching: $0.3125 per 1 million tokens

Prompts Longer than 128k Tokens:

Input Tokens: $2.50 per 1 million tokens

Output Tokens: $10.00 per 1 million tokens

Context Caching: $0.625 per 1 million tokens

Context Caching (storage): $4.50 per 1 million tokens per hour

Tuning Price: Not available.

Grounding with Google Search: $35 per 1,000 grounding requests (for up to 5,000 requests per day).

Used to Improve our Products: No

Best Use Cases: In the free tier, this model is best suited to testing, exploring its capabilities, and developing initial AI-powered applications: content creation, complex reasoning tasks, analysis of smaller datasets, and educational or exploratory projects. Because of the free tier’s low rate limits, it may not be suitable for high-volume, production-level applications at this time.
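Two of the Gemini 1.5 Pro charges above are not billed per processed token: cache storage is priced per million tokens per hour, and grounding is priced per thousand requests. A minimal sketch of both calculations follows. The function names are hypothetical, and the grounding function does not model the 5,000-requests-per-day ceiling mentioned above, since the article does not say what applies beyond it.

```python
def cache_storage_cost(cached_tokens: int, hours: float,
                       price_per_million_per_hour: float = 4.50) -> float:
    """Cost in USD of keeping `cached_tokens` in the context cache for `hours`.

    The default is the Gemini 1.5 Pro storage price quoted in this article.
    """
    return cached_tokens / 1_000_000 * hours * price_per_million_per_hour


def grounding_cost(requests: int, price_per_thousand: float = 35.0) -> float:
    """Cost in USD of `requests` grounding requests at $35 per 1,000."""
    return requests / 1_000 * price_per_thousand


print(f"${cache_storage_cost(500_000, 2):.2f}")  # $4.50
print(f"${grounding_cost(200):.2f}")             # $7.00
```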

3. Gemini 2.0 Flash:

Both input and output tokens are provided at no cost ($0.00 per million tokens), and this pricing remains consistent whether you’re working with small prompts under 128K tokens or larger contexts exceeding 128K tokens. This represents a significant departure from traditional AI model pricing structures, which typically charge based on token volume and context window size.

The free pricing model enables developers and organizations to extensively test and experiment with the model’s capabilities without budget constraints, though it’s worth noting that this pricing structure may be subject to change once the model moves beyond its experimental status. This approach democratizes access to advanced AI capabilities and encourages innovation across various use cases, from simple queries to complex, large-context applications.

Gemini Models Pricing and Features Comparison

Model Focus & Availability

| Feature | Gemini 1.5 Flash | Gemini 1.5 Pro | Gemini 2.0 Flash |
|---|---|---|---|
| Primary Focus | Speed and low latency | Balance of performance and capabilities | Latest experimental features |
| Availability | Generally available for production | Testing purposes only | Experimental preview |
| Context Window | 1 million tokens | Not specified | Not specified |

Free Tier Rate Limits

| Limit Type | Gemini 1.5 Flash | Gemini 1.5 Pro | Gemini 2.0 Flash |
|---|---|---|---|
| Requests per Minute (RPM) | 15 | 2 | N/A |
| Tokens per Minute (TPM) | 1 million | 32,000 | N/A |
| Requests per Day (RPD) | 1,500 | 50 | N/A |

Pay-as-you-go Rate Limits

| Limit Type | Gemini 1.5 Flash | Gemini 1.5 Pro | Gemini 2.0 Flash |
|---|---|---|---|
| Requests per Minute (RPM) | 2,000 | 1,000 | N/A |
| Tokens per Minute (TPM) | 4 million | 4 million | N/A |
| Prompt Size at Base Price | Up to 128k tokens | Up to 128k tokens | N/A |

Pricing for Prompts ≤ 128k Tokens

| Price Category | Gemini 1.5 Flash | Gemini 1.5 Pro | Gemini 2.0 Flash |
|---|---|---|---|
| Input Tokens (per 1M) | $0.075 | $1.25 | $0.00 |
| Output Tokens (per 1M) | $0.30 | $5.00 | $0.00 |
| Context Caching (per 1M) | $0.01875 | $0.3125 | N/A |
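To see what the price gap in the table above means in practice, the sketch below compares the two paid models on the same workload. The workload (10,000 requests, each with 2,000 input and 500 output tokens) is an invented example, and `monthly_cost` is a hypothetical helper. All prompts are assumed to stay under 128k tokens, so only the prices from this table are used.

```python
# USD per 1 million tokens for prompts up to 128k tokens, from the table above.
PRICES_PER_MILLION = {
    "Gemini 1.5 Flash": {"input": 0.075, "output": 0.30},
    "Gemini 1.5 Pro": {"input": 1.25, "output": 5.00},
}


def monthly_cost(model: str, requests: int,
                 input_tokens_per_request: int,
                 output_tokens_per_request: int) -> float:
    """Estimate the cost in USD of a workload of identical requests."""
    prices = PRICES_PER_MILLION[model]
    total_input = requests * input_tokens_per_request
    total_output = requests * output_tokens_per_request
    return (total_input * prices["input"]
            + total_output * prices["output"]) / 1_000_000


# Hypothetical workload: 10,000 requests, 2,000 input and 500 output tokens each.
for model in PRICES_PER_MILLION:
    print(f"{model}: ${monthly_cost(model, 10_000, 2_000, 500):.2f}")
# Gemini 1.5 Flash: $3.00
# Gemini 1.5 Pro: $50.00
```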

Pricing for Prompts > 128k Tokens

| Price Category | Gemini 1.5 Flash | Gemini 1.5 Pro | Gemini 2.0 Flash |
|---|---|---|---|
| Input Tokens (per 1M) | $0.15 | $2.50 | $0.00 |
| Output Tokens (per 1M) | $0.60 | $10.00 | $0.00 |
| Context Caching (per 1M) | $0.0375 | $0.625 | N/A |

Additional Features & Pricing

| Feature | Gemini 1.5 Flash | Gemini 1.5 Pro | Gemini 2.0 Flash |
|---|---|---|---|
| Context Caching Storage (per 1M tokens/hour) | $1.00 | $4.50 | N/A |
| Model Tuning | Free | Not Available | N/A |
| Google Search Grounding (per 1k requests) | $35 | $35 | N/A |
| Used to Improve Products | No | No | N/A |

Best Use Cases

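| Model | Recommended Applications |
|---|---|
| Gemini 1.5 Flash | Real-time chatbots, instant language translation, mobile applications, content summarization, automation, and processing of extensive documents |
| Gemini 1.5 Pro | Testing and development of more complex AI tasks, content creation, complex reasoning, analysis of smaller datasets, and educational or exploratory projects |
| Gemini 2.0 Flash | Free testing and experimentation, from simple queries to complex, large-context applications |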

Final Thoughts:

In conclusion, Gemini 2.0 Flash’s free pricing for input and output tokens during its experimental phase marks a significant shift in AI accessibility, enabling experimentation and innovation without cost barriers. Gemini 1.5 Flash and Gemini 1.5 Pro remain powerful options with tiered pricing for specific needs. As always, consult official Google resources for the latest details.