The artificial intelligence landscape is evolving rapidly, with new players and technologies emerging at a dizzying pace. While OpenAI has long held a prominent position with its groundbreaking models, a new contender has entered the arena: DeepSeek. This Chinese AI startup is making waves with its open-source approach and rapid advancements, creating a dynamic that is reshaping the future of AI.

DeepSeek: The Open-Source Challenger

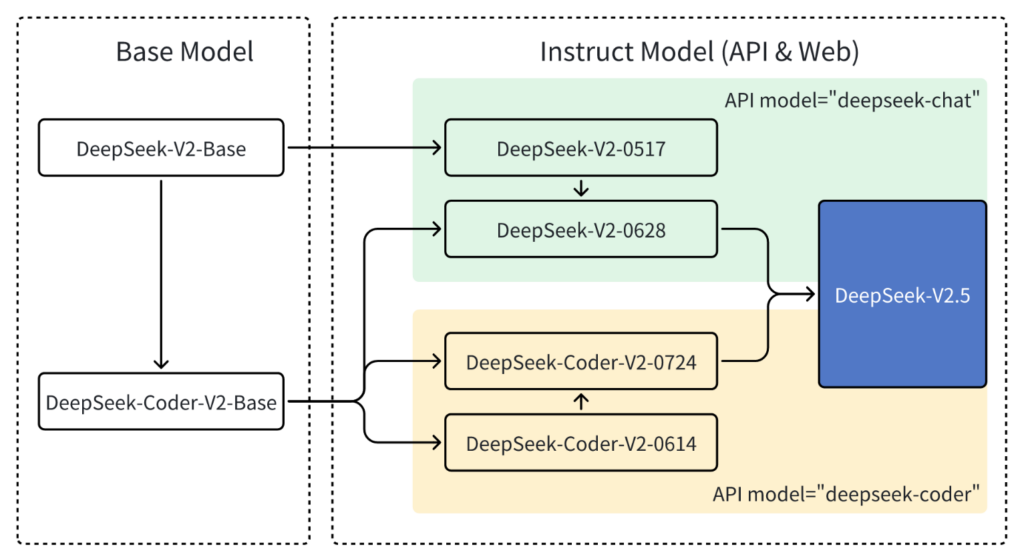

DeepSeek is a Chinese artificial intelligence company that has rapidly gained attention for its development of powerful, open-source large language models (LLMs). It was founded in 2023 by Liang Wenfeng and is funded by the Chinese hedge fund High-Flyer. They have released several models, with DeepSeek-R1 and DeepSeek-V3 being among the most notable.

DeepSeek has quickly become a force to be reckoned with. Unlike OpenAI’s proprietary approach, DeepSeek centers its work on open-source LLMs, so its models are freely available for anyone to use, modify, and learn from. This is a major differentiator, and it has allowed DeepSeek to gain significant traction in the AI community.

Their focus isn’t just on accessibility, though. DeepSeek has demonstrated impressive results in developing highly performant models, particularly in advanced reasoning tasks like mathematical problem-solving and logical inference. They’ve also shown the ability to train their models with impressive efficiency, using less advanced hardware and significantly lower costs than competitors. This is changing the narrative of who can develop powerful AI, and how it can be done.

Key Characteristics of DeepSeek:

Open-Source Approach: DeepSeek’s models are primarily open-source, meaning their code is freely available for use, modification, and viewing, often under the MIT license. This allows researchers and developers to build upon their work. This is a big differentiator from many US companies like OpenAI that are taking a more closed-source approach.

Cost-Effective Training: DeepSeek has demonstrated the ability to achieve high performance with significantly fewer resources than competitors. They reportedly trained the DeepSeek-R1 model using less advanced chips and at a fraction of the cost of comparable models from companies like OpenAI. This suggests that they are very efficient in their resource usage.

Reasoning Capabilities: DeepSeek’s models, particularly the R1 model, are designed with a focus on advanced reasoning tasks, such as mathematical problem-solving and logical inference. This is what sets them apart from typical language models.

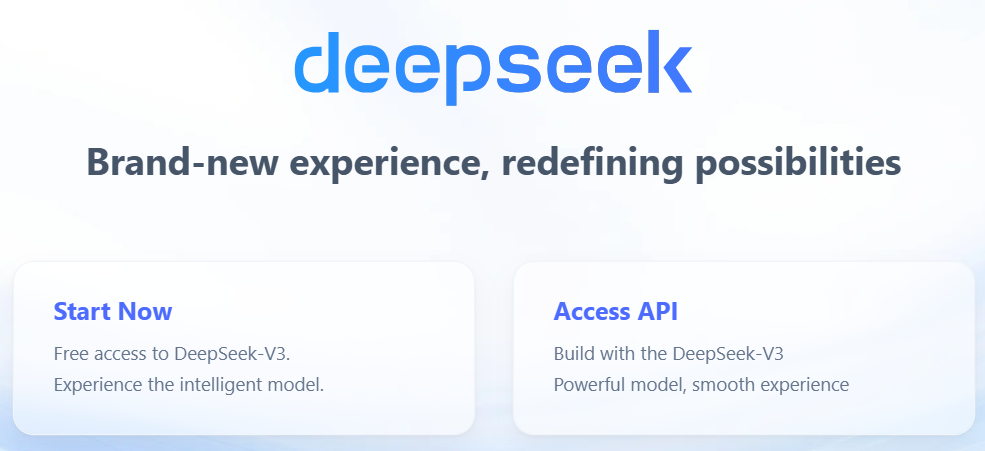

Performance: DeepSeek models are achieving performance levels that rival or surpass those of leading models from OpenAI and Google on certain benchmarks.

Rapid Growth: The company has quickly risen in prominence, with its apps reaching the top of app store charts and sparking significant discussions in the tech world.

Model Architecture

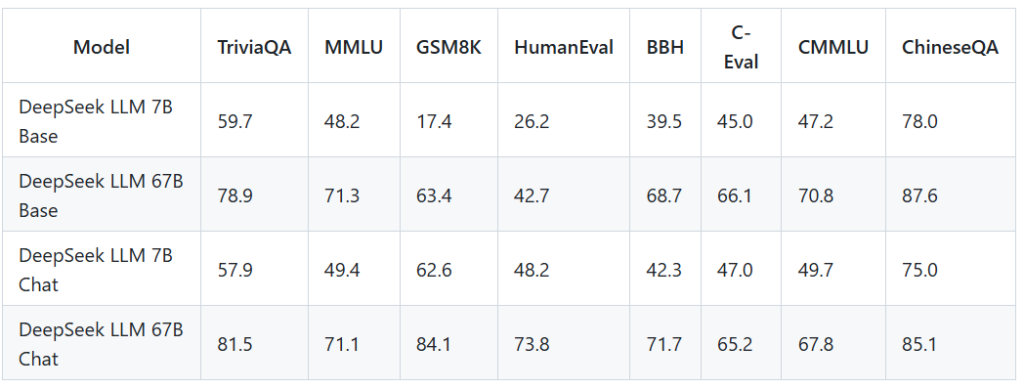

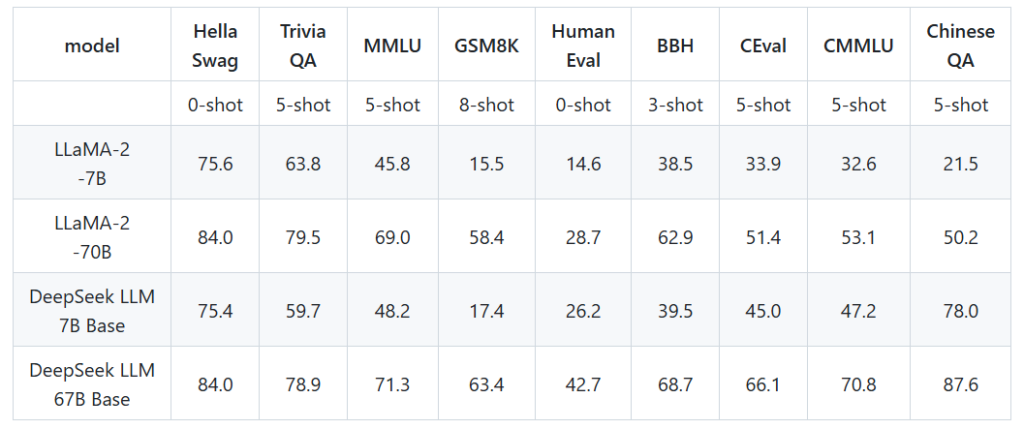

The architecture of DeepSeek LLM is based on an auto-regressive transformer decoder model, similar to LLaMA. The 7B model utilizes Multi-Head Attention (MHA), while the 67B model employs Grouped-Query Attention (GQA) to enhance computational efficiency. Both models support a sequence length of up to 4096 tokens, allowing them to handle extensive context in textual data.
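
To make the difference between MHA and GQA concrete, here is a minimal sketch of how query heads map onto shared key/value heads and what that does to the size of the key/value cache. The layer count, head counts, and head dimension below are hypothetical values picked for illustration, not DeepSeek’s actual configuration.

```python
def kv_head_for_query_head(query_head, num_query_heads, num_kv_heads):
    """Return the index of the key/value head that a given query head reads from."""
    if num_query_heads % num_kv_heads != 0:
        raise ValueError("num_query_heads must be a multiple of num_kv_heads")
    group_size = num_query_heads // num_kv_heads
    return query_head // group_size


def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Size of the key/value cache for one sequence (keys plus values)."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Hypothetical configuration, chosen only for illustration.
NUM_LAYERS, NUM_QUERY_HEADS, HEAD_DIM, SEQ_LEN = 8, 16, 64, 4096

# MHA: every query head has its own key/value head.
mha_cache = kv_cache_bytes(NUM_LAYERS, NUM_QUERY_HEADS, HEAD_DIM, SEQ_LEN)
# GQA: 16 query heads share 4 key/value heads (groups of 4).
gqa_cache = kv_cache_bytes(NUM_LAYERS, 4, HEAD_DIM, SEQ_LEN)

print("query head 5 reads KV head", kv_head_for_query_head(5, NUM_QUERY_HEADS, 4))
print("cache size ratio MHA/GQA:", mha_cache // gqa_cache)
```

With MHA the number of key/value heads equals the number of query heads, so the mapping is one-to-one. With GQA the cache shrinks in proportion to the group size, which is where the efficiency gain comes from.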

Training Process

Pre-training: The models are pre-trained on a massive dataset of 2 trillion tokens in both English and Chinese. This stage helps the model learn general language patterns and knowledge.

The training uses the AdamW optimizer with specific hyperparameters: β1 = 0.9, β2 = 0.95, and a weight decay of 0.1. The learning rate is adjusted using a multi-step scheduler.

The 7B model used a batch size of 2304 and a learning rate of 4.2e-4, while the 67B model used a batch size of 4608.
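
The optimizer settings above can be sketched in a few lines of plain Python. This is an illustrative, single-parameter version of the AdamW update combined with a multi-step learning-rate schedule. The β values, weight decay, and peak learning rate come from the text; the milestone positions and multipliers, the warmup handling, and the toy objective are assumptions made for the example.

```python
import math


def multi_step_lr(step, peak_lr, total_steps, warmup_steps=0,
                  milestones=((0.8, 0.316), (0.9, 0.1))):
    """Piecewise-constant schedule: optional linear warmup, then a drop at each milestone.

    milestones holds (fraction_of_training, multiplier) pairs; these values are assumptions.
    """
    if warmup_steps and step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    multiplier = 1.0
    for fraction, factor in milestones:
        if step >= fraction * total_steps:
            multiplier = factor
    return peak_lr * multiplier


def adamw_step(param, grad, m, v, t, lr,
               beta1=0.9, beta2=0.95, eps=1e-8, weight_decay=0.1):
    """One AdamW update for a single scalar parameter (t starts at 1)."""
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1 ** t)          # bias-corrected first moment
    v_hat = v / (1 - beta2 ** t)          # bias-corrected second moment
    param = param - lr * weight_decay * param            # decoupled weight decay
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# Toy objective f(x) = x^2 with gradient 2x, standing in for a real loss.
x, m, v = 1.0, 0.0, 0.0
TOTAL_STEPS = 1000
for t in range(1, TOTAL_STEPS + 1):
    lr = multi_step_lr(t - 1, peak_lr=4.2e-4, total_steps=TOTAL_STEPS)
    x, m, v = adamw_step(x, 2 * x, m, v, t, lr)

print(x)
```

The key property of a multi-step schedule is that the learning rate stays flat for long stretches and drops only at a few fixed points, unlike cosine schedules, which decay continuously.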

Supervised Fine-Tuning (SFT): After pre-training, the models undergo supervised fine-tuning using instruction-response pairs. DeepSeek collected around 1.5 million instruction data examples in English and Chinese to improve helpfulness in tasks like coding and math.

Notably, they experimented with adding 20 million Chinese multiple-choice questions during the SFT phase, significantly boosting the model’s performance on multiple-choice tasks.

Reinforcement Learning (RL): To further improve performance, DeepSeek uses reinforcement learning.

They use a method called Group Relative Policy Optimization (GRPO), which was developed in-house.

GRPO works by sampling a group of outputs (reasoning process and answer) for an input and training the model to generate preferred options based on predefined rules.

Rewards are given based on accuracy and other factors.
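
The group-relative part of GRPO can be illustrated with a small sketch: score every output in a sampled group with a rule-based reward, then normalize each reward against the group’s mean and standard deviation to get an advantage. The `<think>`/`<answer>` tag format, the reward weights, and the sample outputs are assumptions for this example, and the policy-gradient update itself is left out.

```python
import re
import statistics


def rule_based_reward(output, reference_answer):
    """Toy reward: 1.0 for a correct final answer plus 0.1 for the expected format.

    The tag format and the weights are assumptions for illustration.
    """
    match = re.search(r"<answer>(.*?)</answer>", output, flags=re.DOTALL)
    accuracy = 1.0 if match and match.group(1).strip() == reference_answer else 0.0
    well_formed = 0.1 if "<think>" in output and match else 0.0
    return accuracy + well_formed


def group_relative_advantages(rewards):
    """Normalize each reward against the mean and standard deviation of its group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:
        return [0.0 for _ in rewards]  # identical rewards carry no learning signal
    return [(r - mean) / std for r in rewards]


# A hypothetical group of sampled outputs for the prompt "What is 2 + 2?"
outputs = [
    "<think>2 + 2 = 4</think><answer>4</answer>",
    "<think>2 + 2 = 5</think><answer>5</answer>",
    "The answer is 4",
    "<answer>4</answer>",
]
rewards = [rule_based_reward(o, "4") for o in outputs]
advantages = group_relative_advantages(rewards)

for reward, advantage in zip(rewards, advantages):
    print(f"reward={reward:.1f} advantage={advantage:+.2f}")
```

Outputs that beat the group average get a positive advantage and are reinforced; the rest are discouraged. Because the baseline comes from the group itself, no separate value model is needed.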

DeepSeek-R1’s training includes four distinct stages: initial supervised fine-tuning, reinforcement learning focused on reasoning, new training data collection using rejection sampling, and final reinforcement learning across various tasks. In the rejection-sampling stage, a large number of samples are generated and a reward model filters for the best ones, which then serve as data for further supervised fine-tuning.
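
Rejection sampling is simple to sketch: generate several candidates per prompt, score them, and keep only the ones that pass. In the example below, `toy_generate` and `toy_score` are hypothetical stand-ins for a real model and a real reward model, and the candidate count and threshold are arbitrary.

```python
import random


def rejection_sample(prompt, generate, score, num_candidates=8, threshold=0.5):
    """Draw several candidates for a prompt and keep those scoring at or above threshold."""
    candidates = [generate(prompt) for _ in range(num_candidates)]
    return [(prompt, c) for c in candidates if score(prompt, c) >= threshold]


rng = random.Random(0)  # fixed seed so the toy run is repeatable


def toy_generate(prompt):
    """Hypothetical 'model': adds two numbers but is sometimes off by one."""
    a, b = (int(x) for x in prompt.split("+"))
    return str(a + b + rng.choice([-1, 0, 0, 1]))


def toy_score(prompt, candidate):
    """Hypothetical 'reward model': 1.0 if the sum is right, else 0.0."""
    a, b = (int(x) for x in prompt.split("+"))
    return 1.0 if int(candidate) == a + b else 0.0


sft_examples = rejection_sample("17 + 25", toy_generate, toy_score)
print(len(sft_examples), "of 8 candidates kept")
```

The surviving (prompt, response) pairs are what would be fed into the next round of supervised fine-tuning.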

Parameter Optimization

A notable technical innovation in DeepSeek’s approach involves parameter precision optimization. Traditional models typically use 32-bit precision to represent neural network weights. DeepSeek has successfully implemented an 8-bit precision system, dramatically reducing both memory and computational requirements during the training phase. While such precision reduction typically risks accuracy degradation, DeepSeek has developed advanced techniques to maintain performance quality while benefiting from reduced resource requirements.
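
To get a feel for the trade-off, here is a minimal sketch of 8-bit quantization. Note that it uses plain int8 quantization with a single absmax scale, a much simpler technique than whatever DeepSeek uses during training; it is only meant to show how values stored in 8 bits instead of 32 lose a bounded amount of accuracy. The weight values are made up.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using a single absmax scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


weights = [0.12, -0.5, 0.33, 0.01, -0.27]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("quantized:", q)
print("max round-trip error:", max_error)
print("storage ratio 32-bit vs 8-bit:", 32 // 8)
```

The round-trip error is bounded by half the scale, so a single large outlier inflates the error for every other value. Handling that well is one of the things a production low-precision scheme has to solve.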

Enhanced Token Prediction

DeepSeek has also revolutionized the token prediction process. Traditional language models generate responses sequentially, producing one token at a time. DeepSeek’s innovation enables the simultaneous prediction of multiple consecutive tokens, yielding two significant advantages: improved inference quality and reduced computational overhead in generating complete responses.
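
A rough way to picture multi-token prediction is to look at what the model is asked to predict at each position. The sketch below builds the targets for a toy sentence and shows the best-case effect on the number of decoding passes. The function names and the `depth` parameter are hypothetical, and the pass count assumes every predicted token is kept, which a real system would not guarantee.

```python
def multi_token_targets(tokens, depth):
    """For each position, list the next `depth` tokens the model would be asked to predict."""
    examples = []
    for i in range(len(tokens) - depth):
        context = tokens[: i + 1]
        targets = tokens[i + 1 : i + 1 + depth]
        examples.append((context, targets))
    return examples


def decoding_passes(num_tokens, tokens_per_pass):
    """Best-case number of forward passes if every predicted token is accepted."""
    return -(-num_tokens // tokens_per_pass)  # ceiling division


tokens = ["The", "cat", "sat", "on", "the", "mat"]
for context, targets in multi_token_targets(tokens, depth=2):
    print(context[-1], "->", targets)

print("passes for 10 tokens, one at a time:", decoding_passes(10, 1))
print("passes for 10 tokens, two at a time:", decoding_passes(10, 2))
```

With `depth=1` this reduces to ordinary next-token prediction, which makes it easy to see what the extra targets add.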

Implications for the Industry

These innovations represent a significant shift in approach to AI model development. While many companies focus on scaling up hardware resources to achieve better performance, DeepSeek demonstrates that substantial improvements can be achieved through architectural and algorithmic innovations. Their approach not only addresses current limitations in AI deployment but also opens new possibilities for more efficient and accessible AI systems.

Comparative performance of five AI models across five benchmarks (Arena-Hard, MMLU-Pro, GPQA-Diamond, LiveCodeBench, LiveBench) as of 2024-08-31, using an unspecified metric where higher values indicate better performance.

OpenAI: The Established Leader

OpenAI, on the other hand, remains a dominant force in the AI world. They’ve spearheaded groundbreaking technologies like GPT-4 and DALL-E, and their models are widely adopted in commercial and research settings. OpenAI’s approach is primarily proprietary; while they offer access through APIs and services, the underlying models and training data are generally kept under wraps.

OpenAI’s success rests on significant funding and a history of innovation. Its position as a leader has allowed it to set the stage for current AI trends and to influence the direction of the field.

In recent developments within the artificial intelligence (AI) sector, OpenAI and its major backer, Microsoft, are investigating whether Chinese AI startup DeepSeek improperly utilized OpenAI’s proprietary technology to develop its own AI models. This inquiry underscores the complexities of intellectual property rights and ethical considerations in the rapidly evolving AI industry.

Background on DeepSeek and OpenAI

OpenAI, renowned for its advanced AI models like ChatGPT, has been a leader in AI research and development. DeepSeek, a relatively new entrant, has quickly gained attention with its AI assistant, R1, which has surpassed ChatGPT in downloads on Apple’s App Store. This rapid ascent has prompted scrutiny regarding the methods employed in R1’s development.

The Allegations

OpenAI suspects that DeepSeek may have engaged in unauthorized use of its data to train R1. Specifically, there are concerns that DeepSeek employed a technique known as “distillation,” where a smaller model is trained using the outputs of a larger, more advanced model. This approach can enhance performance but may infringe upon intellectual property rights if done without proper authorization.
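
For readers unfamiliar with distillation, here is a minimal sketch of the textbook version, in which a student model is trained to match a teacher’s softened output distribution. It is shown only to make the idea concrete and says nothing about what DeepSeek did. With API access alone, only generated text is available, so distillation in that setting would mean fine-tuning on the teacher’s responses rather than matching logits. The logit values and the temperature are made up.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


teacher = [2.0, 1.0, 0.1]        # made-up teacher logits over three tokens
close_student = [1.8, 1.1, 0.2]  # nearly agrees with the teacher
far_student = [0.1, 1.0, 2.0]    # ranks the tokens in the opposite order

print("close student loss:", distillation_loss(teacher, close_student))
print("far student loss:", distillation_loss(teacher, far_student))
```

Training the student to minimize this loss pulls its predictions toward the teacher’s, which is how a smaller model can inherit some of a larger model’s behavior.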

The Investigation

Microsoft detected unusual activity involving the use of an OpenAI API and alerted OpenAI to the potential breach. The investigation is ongoing, with both companies examining whether DeepSeek bypassed restrictions to acquire large amounts of data, possibly violating OpenAI’s terms of service.

Industry Reactions

The AI community has expressed concerns over the implications of this case. Experts highlight the challenges of protecting intellectual property in an industry where rapid innovation often outpaces regulatory frameworks. The situation has also sparked discussions about the need for robust AI governance and export controls, especially considering potential military applications and competitive advantages.

Potential Consequences

If the allegations against DeepSeek are substantiated, the company could face legal actions, including lawsuits and sanctions, which may hinder its operations and reputation. For OpenAI, the case underscores the importance of implementing stringent measures to safeguard its proprietary technology and may lead to a reevaluation of its data security protocols.

Broader Implications for the AI Industry

This incident highlights the broader issue of technology replication in the AI sector. It underscores the necessity for clear guidelines and regulations to protect intellectual property while fostering innovation. The case also emphasizes the importance of international cooperation in establishing ethical standards and governance frameworks to navigate the complex landscape of AI development.

Our Expert Review on DeepSeek:

DeepSeek: A Game-Changer in Open-Source AI

The DeepSeek model is shaking up the AI landscape, showcasing impressive capabilities despite a significantly smaller budget than proprietary giants like OpenAI’s o1. Here’s a quick breakdown:

Key Highlights

- 💰: Cost-Effective Innovation:
  - DeepSeek reportedly cost $6M to develop, yet it rivals OpenAI’s $100M o1 model in many areas.
  - Clever strategies, probably including synthetic GPT-4.0 training data and reverse-engineering, reduced costs.

- 🤖: Performance Insights:
  - Strong in problem-solving and step-by-step reasoning, ideal for complex tasks.
  - Slower load times and only limited improvements in creative writing.
  - German-language performance is unknown.

- 🌐: Open-Source Triumph:
  - Open-source accessibility enables hosting without restrictions (e.g., Chinese censorship).
  - Represents the closing gap between open and closed-source AI models.

- 🔗: Relevance for Content Creators:
  - Creative content generation has already plateaued with GPT-4.0.
  - DeepSeek’s strength lies in logical reasoning, not creativity, making it less relevant for now.

Broader Implications

- 🚀: Open-Source Power: Open-source AI is rapidly catching up, leveling the playing field.

- 📉: Market Shifts: Efficient models like DeepSeek could disrupt hardware demand (bad news for NVIDIA and its shareholders).

- 🔎: Future Potential: Models like DeepSeek hint at a future where cost-effective AI thrives (roughly 5% of the cost of OpenAI models).

DeepSeek is a fascinating step forward, proving that impactful innovation doesn’t always need massive budgets. It’s an exciting development for the AI community and worth keeping on the radar.

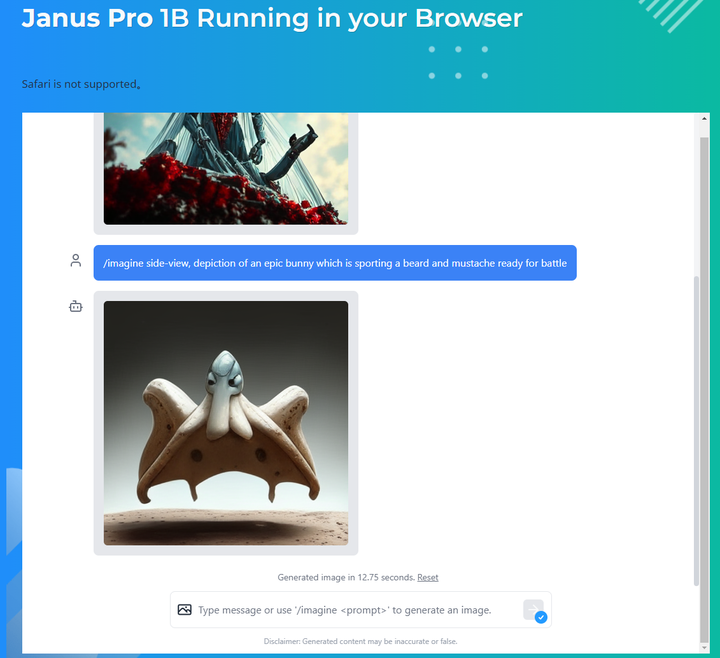

On the image side, DeepSeek’s new image model, Janus, can run in the browser, but its output is pretty bad (see image).

DeepSeek vs OpenAI: Competition, Safety, and the Future of AI

The relationship between DeepSeek and OpenAI is not just about competition; it’s a catalyst for change. Here’s how:

Disruption of the Status Quo: DeepSeek’s open-source approach directly challenges the traditional model of closed-source AI development. By making its models freely available, it empowers developers and researchers worldwide, potentially accelerating innovation.

Competition Drives Innovation: The very act of two companies striving to develop more powerful and better models pushes the entire industry forward. It leads to rapid advancements, improved performance, and new approaches to AI development.

Benchmarking and Transparency: The open nature of DeepSeek’s models allows for more transparency in the evaluation of model performance. Researchers can compare DeepSeek’s models to OpenAI’s, which helps identify strengths and weaknesses and improve overall understanding.

Shifting Power Dynamics: The emergence of a strong Chinese AI company is shifting the power dynamics in the AI space. For many years the technology has been dominated by American companies, and the arrival of DeepSeek has created a competitive environment that’s a win for the field and the public in general.

Geopolitical Implications: This competition also carries geopolitical implications. It’s a reflection of the broader technological race between the US and China. The success of DeepSeek has raised national security concerns in the United States and has led to increased debate on topics such as AI export controls and data privacy.

DeepSeek: Safe to Use?

There are no direct reports suggesting that DeepSeek is unsafe to use in terms of security, privacy, or reliability. However, given the ongoing investigation by OpenAI and Microsoft regarding possible unauthorized use of OpenAI’s technology, there are a few factors to consider before using DeepSeek:

Data Privacy Concerns: DeepSeek’s privacy policy states that user data is stored on servers located in China, raising concerns about access by the Chinese government. DeepSeek collects user data, including device information, keystroke patterns, and IP addresses, which could be exposed or misused. International data protection authorities are also investigating DeepSeek, especially regarding its handling of user data.

Potential for Misuse: Like any powerful AI, DeepSeek models could be used for malicious purposes such as creating misinformation or engaging in influence campaigns.

Censorship Concerns: Some users have reported DeepSeek censoring or avoiding questions on politically sensitive topics, particularly those related to China, raising concerns about bias and propaganda.

Cybersecurity: DeepSeek has experienced large-scale malicious attacks, highlighting potential vulnerabilities in their systems.

What Does This Mean for You?

Whether you’re a developer, researcher, or casual user, the competition between DeepSeek and OpenAI has important implications:

Increased Access to AI Tools: The rise of open-source models like DeepSeek will lead to broader access to powerful AI tools, which could spark innovation across various fields.

Greater Transparency: Open-source projects promote transparency and collaboration, which benefits the entire AI community.

More Choices: Users will have more options when it comes to choosing the right AI tools for their needs.

Increased Vigilance: Be cautious about data privacy, and stay informed about the potential risks of using AI platforms, particularly newer ones like DeepSeek.

Recommendations for Using DeepSeek:

Be cautious about the data you share: Given privacy concerns, avoid disclosing sensitive or personal information in your interactions with DeepSeek.

Stay informed: Keep up with the latest news and developments regarding DeepSeek and its safety practices.

Be aware of potential bias: Recognize that DeepSeek’s responses may be influenced by censorship or bias, particularly on topics related to China.

Consider alternatives: If data privacy is a major concern, explore other AI tools and platforms from providers with more transparent and user-friendly data policies.

Use at your own risk: Understand that using DeepSeek involves accepting potential risks related to data privacy, security and censorship.

Things to Keep an Eye On:

Model Performance: How DeepSeek’s models continue to develop and compare to OpenAI’s.

License Changes: Whether DeepSeek maintains its open-source philosophy or adjusts it.

OpenAI’s Response: How OpenAI adapts its strategies and offerings in the face of competition from open-source models.

The Broader AI Landscape: How this dynamic impacts the overall development and accessibility of AI technologies.

In summary

DeepSeek is a powerful and rapidly evolving AI platform with innovative technology, but it comes with its own set of risks related to data privacy, potential for misuse, and censorship. It’s crucial to be aware of these factors and exercise caution when using their products.