OpenAI has released o3-mini, the newest and most cost-efficient model in its reasoning series. Available today in both ChatGPT and the API, this fast model, previewed in December 2024, pushes the boundaries of what small AI models can achieve. It offers exceptional STEM capabilities, particularly in science, math, and coding, while maintaining the low cost and reduced latency of its predecessor, o1-mini.

What Makes ChatGPT o3-mini Special?

o3-mini is optimized for science, math, and coding, making it an excellent choice for technical problem-solving. It also introduces key developer-friendly features, including:

- Structured Outputs – More precise, formatted responses

- Function Calling – Seamless integration into applications

- Batch API Support – More efficient, large-scale processing

- Customizable Reasoning Effort – Users can select low, medium, or high reasoning effort to balance speed and depth

Like other models in the o-series, ChatGPT o3-mini is purpose-built to deliver accurate, logical, and reliable responses for complex technical inquiries.
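As a rough illustration of how these features appear in a request, the sketch below builds a Chat Completions request body that sets the reasoning effort, asks for schema-constrained JSON, and optionally declares one callable function. It only constructs the payload and sends nothing. The schema name `math_answer`, its fields, and the `evaluate_expression` tool are hypothetical names chosen for this example, and the helper functions are not part of any SDK.

```python
# Real Chat Completions endpoint; this sketch never sends a request to it.
API_URL = "https://api.openai.com/v1/chat/completions"

VALID_EFFORTS = ("low", "medium", "high")


def build_request(prompt: str, effort: str = "medium") -> dict:
    """Build a request body with a reasoning effort and a JSON-schema response format."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"unsupported reasoning effort: {effort!r}")
    return {
        "model": "o3-mini",
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
        # Structured Outputs: constrain the reply to a JSON schema.
        # The schema name and fields below are hypothetical.
        "response_format": {
            "type": "json_schema",
            "json_schema": {
                "name": "math_answer",
                "strict": True,
                "schema": {
                    "type": "object",
                    "properties": {
                        "answer": {"type": "string"},
                        "explanation": {"type": "string"},
                    },
                    "required": ["answer", "explanation"],
                    "additionalProperties": False,
                },
            },
        },
    }


def add_tool(request: dict) -> dict:
    """Return a copy of the request that also declares one function (hypothetical)."""
    tool = {
        "type": "function",
        "function": {
            "name": "evaluate_expression",
            "description": "Evaluate an arithmetic expression and return the result.",
            "parameters": {
                "type": "object",
                "properties": {"expression": {"type": "string"}},
                "required": ["expression"],
            },
        },
    }
    return {**request, "tools": [tool]}
```

A caller would serialize the returned dictionary as JSON and POST it to `API_URL` with an API key. Raising the effort from `"low"` to `"high"` trades response time for deeper reasoning, as described in the feature list.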

Enhanced STEM Capabilities

At its core, o3-mini is engineered for excellence in STEM fields. Whether you’re solving complex equations, debugging code, or exploring scientific theories, this model provides:

Superior STEM Reasoning:

o3-mini delivers highly accurate and clear responses for challenging STEM tasks. Early evaluations indicate that users prefer its responses and that it makes fewer major errors on real-world problems, while still providing rapid, reliable outputs.

Optimized for Technical Domains:

With a strong emphasis on science, math, and coding, o3-mini is designed to handle intricate technical problems. Its improved reasoning efficiency means it can “think harder” when necessary, ensuring precision without sacrificing speed.

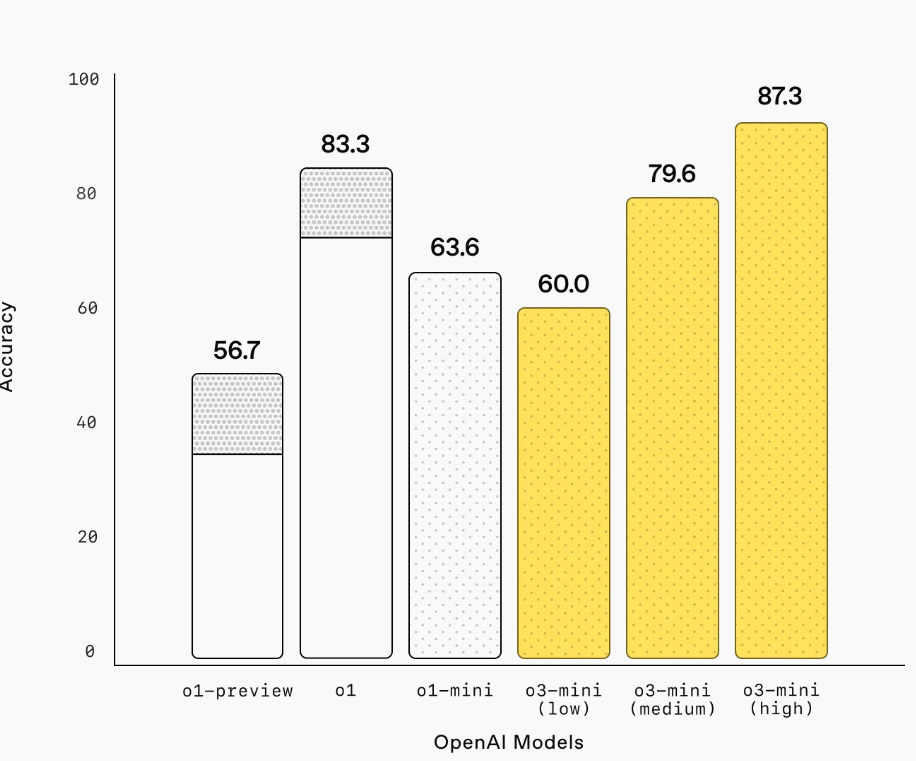

Competition Math (AIME 2024)

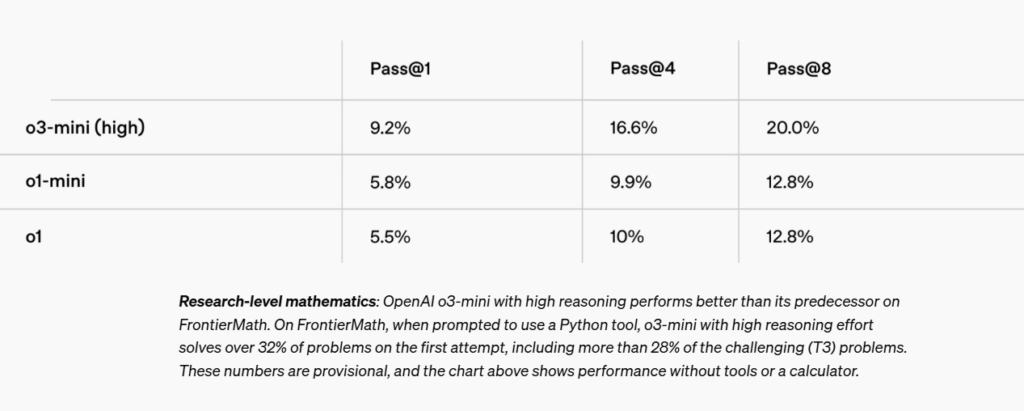

The ChatGPT o3-mini model demonstrates exceptional mathematical capabilities, particularly in competitive mathematical examinations. In the 2024 American Invitational Mathematics Examination (AIME), the o3-mini-high variant achieved an outstanding performance, scoring 87.3% and surpassing the full o1 model’s results.

The AIME examination is renowned for its rigorous problem sets that span multiple mathematical domains, including number theory, probability, algebra, and geometry. By successfully navigating these complex mathematical challenges, the o3-mini-high model underscores its sophisticated computational and analytical capabilities.

This performance highlights the model’s advanced mathematical reasoning skills and represents a significant milestone in artificial intelligence’s computational mathematics applications.

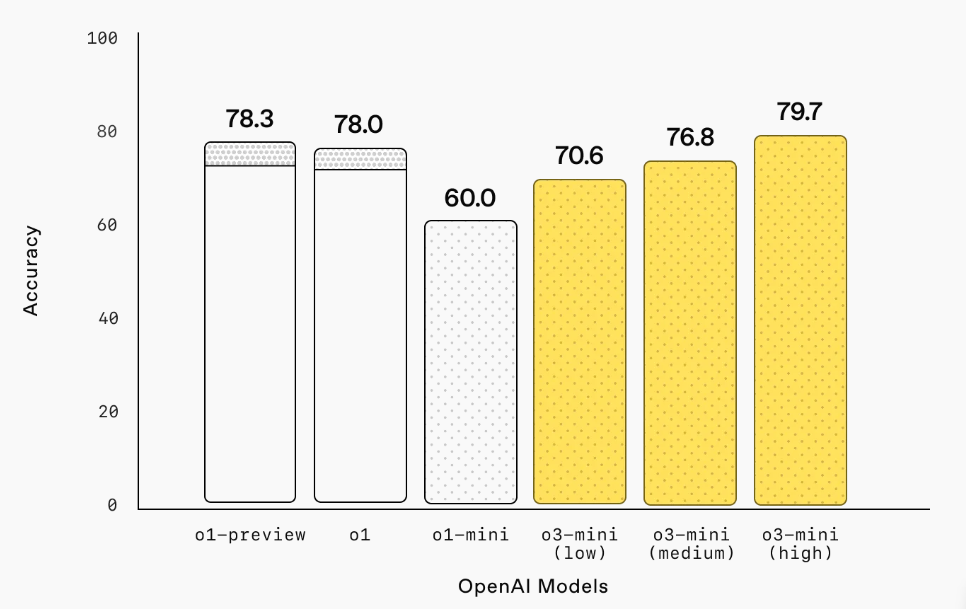

PhD-level Science Questions (GPQA Diamond)

The o3-mini-high model demonstrates exceptional performance in advanced scientific domains, significantly outperforming other AI models in specialized scientific assessments. The GPQA Diamond benchmark, a rigorous evaluation framework, provides compelling evidence of the model’s advanced scientific reasoning capabilities.

This benchmark assesses AI models through a comprehensive set of PhD-level questions spanning critical scientific disciplines, including biology, physics, and chemistry. By excelling in these sophisticated scientific domains, the o3-mini-high model proves its ability to process and analyze complex scientific content with remarkable precision and depth.

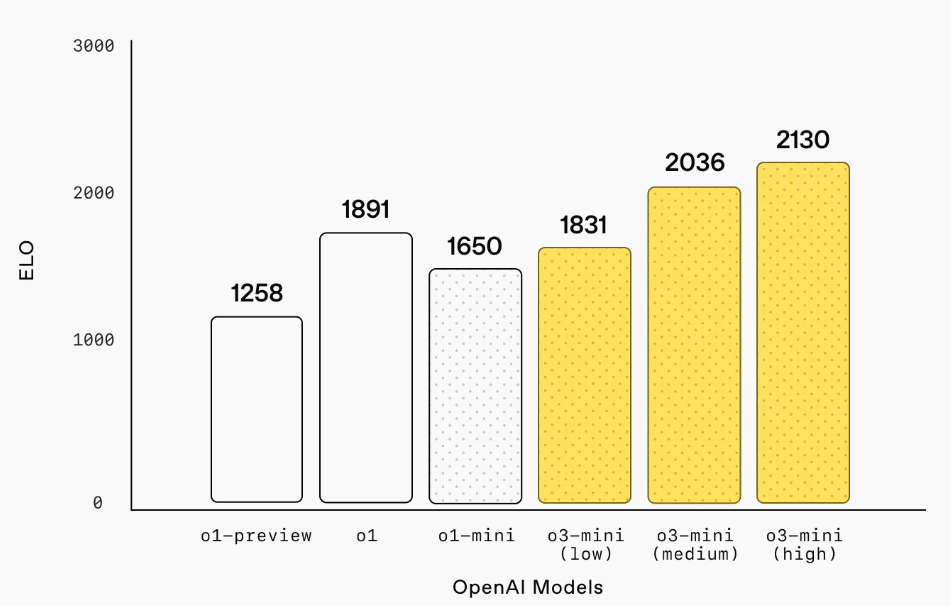

Competition Code (Codeforces)

OpenAI claims that o3-mini offers outstanding performance in coding tasks, all while maintaining low costs and fast processing speeds. Previously, Anthropic’s Claude 3.5 Sonnet was the preferred choice for programming queries.

The OpenAI o3-mini model demonstrates remarkable adaptability and performance in competitive programming environments, specifically on the Codeforces platform. By systematically adjusting reasoning effort, the model achieves progressively higher Elo ratings, consistently outperforming the o1-mini model.

Performance Dynamics:

- At medium reasoning effort, the ChatGPT o3-mini model achieves performance parity with the o1 model

- As reasoning effort increases, the o3-mini model’s Elo scores demonstrate a consistent upward trajectory

- The model’s scalable performance highlights its sophisticated algorithmic problem-solving capabilities

This performance underscores the o3-mini model’s potential for advanced computational tasks, showcasing its ability to dynamically adjust computational strategies and improve problem-solving efficiency across varying levels of complexity.

The results suggest significant advancements in AI model design, particularly in domains requiring nuanced algorithmic reasoning and adaptive computational approaches.
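To give a feel for what a gap in Elo rating means, the sketch below uses the standard Elo expected-score formula. This is an assumption made for illustration: Codeforces computes ratings with its own Elo-style method, so the numbers are intuition only, and the ratings in the usage note are hypothetical rather than measured results for any model.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of player A against player B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))
```

Under this formula two equally rated contestants each have an expected score of 0.5, and a contestant rated 400 points higher, for example a hypothetical 1900 against 1500, has an expected score of about 0.91.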

Developer-Focused Enhancements

Designed with developers in mind, o3-mini brings improvements in speed, usage limits, and safety:

- Faster Processing: Lower latency means quicker response times, crucial for applications requiring real-time AI interactions.

- Higher Rate Limits: OpenAI has increased the usage limits for o3-mini, tripling the message cap for ChatGPT Plus and Team users from 50 to 150 messages per day.

- Improved Safety & Alignment: OpenAI classifies o3-mini as medium risk in Persuasion, CBRN, and Model Autonomy under its Preparedness Framework and has implemented safety mitigations to address these risks.

Additionally, o3-mini has a knowledge cutoff of October 2023, meaning its responses are informed by data available up until that point.

Streaming Support and API Integration:

Consistent with its predecessors, o3-mini supports streaming, so responses can be delivered incrementally as they are generated. It is now available via the Chat Completions API, Assistants API, and Batch API for select users in API usage tiers 3-5, making it easier than ever to integrate advanced AI into your applications.

Detailed Usage Guidelines:

The OpenAI platform documentation outlines specific API usage details for o3-mini—including token limits, temperature parameters, and example scenarios—ensuring that developers have all the necessary guidelines to deploy this model effectively.
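To make the streaming support mentioned above more concrete, here is a minimal sketch of how a client might reassemble streamed text. It assumes the Chat Completions streaming format, in which each server-sent event line starts with `data:` and carries a JSON chunk whose `choices[].delta.content` holds a text fragment, with `data: [DONE]` marking the end. The function name `collect_stream_text` is hypothetical, and the input is a plain iterable of lines rather than a live connection.

```python
import json
from typing import Iterable


def collect_stream_text(lines: Iterable[str]) -> str:
    """Concatenate the text fragments carried by streamed chat completion chunks."""
    parts = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other event fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:
                parts.append(text)
    return "".join(parts)
```

In a real application the lines would come from the HTTP response body, and each fragment would typically be shown to the user as soon as it arrives instead of being collected first.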

Accessibility and Performance

o3-mini is now available in ChatGPT and via API. Here’s how users can access it:

- ChatGPT Plus, Team, and Pro users can start using o3-mini immediately.

- Enterprise access is set to roll out in February.

- Free-tier users can try o3-mini by selecting the ‘Reason’ option in the message composer or regenerating a response.

For developers, o3-mini is now integrated into the Chat Completions API, Assistants API, and Batch API, making it easier to deploy AI-powered applications.
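For large-scale jobs, the Batch API takes a JSONL input file in which every line describes one request. The sketch below builds such lines for a list of prompts, assuming the documented per-line fields `custom_id`, `method`, `url`, and `body`. The helper name `to_batch_lines` and the `task-N` identifiers are hypothetical, and uploading the file and creating the batch are left out.

```python
import json
from typing import List


def to_batch_lines(prompts: List[str], effort: str = "medium") -> str:
    """Return JSONL text with one Chat Completions request per prompt."""
    lines = []
    for index, prompt in enumerate(prompts):
        request = {
            "custom_id": f"task-{index}",  # hypothetical ID used to match results later
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {
                "model": "o3-mini",
                "reasoning_effort": effort,
                "messages": [{"role": "user", "content": prompt}],
            },
        }
        lines.append(json.dumps(request))
    return "\n".join(lines)
```

Each result in the batch output carries the same `custom_id`, which is how responses are matched back to the prompts that produced them.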

Key Differences of ChatGPT o3-mini

The key differentiator lies in its size and efficiency. While retaining a strong grasp of language and context, o3-mini requires less computational power than larger reasoning models. Efficiency of this kind is what resource-limited settings such as mobile devices, embedded systems, and personal computers need, although o3-mini itself is currently accessed through ChatGPT and the API rather than run locally. Imagine having a pocket-sized language assistant capable of understanding and responding to your queries, generating creative text formats, translating languages, and answering your questions in an informative way.

Potential Applications: A World of Possibilities

The compact nature of o3-mini opens up a plethora of exciting possibilities:

- Personal AI Assistants: Imagine a truly personalized AI assistant that resides on your phone, helping you manage your schedule, write emails, and answer your questions, all while respecting your privacy as it processes information locally.

- Offline Language Tools: Travelers could benefit from offline translation apps powered by o3-mini, breaking down language barriers even in remote locations.

- Embedded Systems: Integrating o3-mini into smart devices could enable natural language control and interaction, making our homes and gadgets more intuitive.

- Educational Tools: o3-mini could power interactive learning platforms, providing personalized feedback and guidance to students.

- Accessibility: For individuals with disabilities, o3-mini could offer assistive technologies like real-time transcription, text-to-speech, and language simplification.

How does it compare to other models?

Compared with larger models, the core advantage of o3-mini is its efficiency: it is designed for speed and low resource consumption. The trade-off is that it might not handle the most complex and demanding language tasks that larger models excel at. Think of it as a specialized tool, perfect for specific jobs, rather than a general-purpose powerhouse. It’s about finding the right balance between performance and efficiency.

ChatGPT o3-mini: Smarter AI, Safer AI

While o3-mini significantly improves STEM reasoning and developer capabilities, OpenAI has also prioritized safety:

- Advanced Safeguards: The model has undergone rigorous alignment efforts to minimize harmful outputs.

- Search Integration: o3-mini now works with search functionality, allowing users to retrieve up-to-date answers with relevant web links—a first step in integrating search across OpenAI’s reasoning models.

Conclusion

OpenAI’s o3-mini employs chain-of-thought reasoning within context, which drives robust performance across both its functional and safety benchmarks. Although these enhanced capabilities lead to notable improvements in safety outcomes, they also introduce certain risks. Within the OpenAI Preparedness Framework, o3-mini has been designated as medium risk in Persuasion, CBRN, and Model Autonomy. Overall, both o3-mini and OpenAI o1 are classified as medium risk, and OpenAI has implemented safeguards and safety mitigations for this new family. OpenAI’s approach to iterative real-world deployment reflects its conviction that engaging everyone affected by this technology is key to advancing the AI safety conversation.