Chatbots have become an essential part of customer service and communication, but are the conversations with these bots public or private? In this article, we explore the various concerns and questions surrounding chatbot privacy and offer useful tips for users and businesses to ensure their information stays secure.

As chatbots increasingly become the first point of contact between users and businesses, it’s no surprise that privacy concerns are on the rise. AI-powered chatbots rely on the data they receive to craft contextual responses. How that data is managed and stored therefore matters a great deal in preventing misuse or unauthorized access. For users, understanding the privacy implications of chatting with AI-based services is essential.

AI Chatbots like ChatGPT and privacy

As AI-powered chatbots continue to evolve and find applications in various industries, many people wonder about the privacy and security of their conversations. Two questions come up often: Can anyone access chatbot conversations? How secure are chatbot platforms?

Chatbot platforms like ChatGPT have implemented security measures to protect user privacy. Nevertheless, other platforms may still have security weaknesses that put user data at risk. To understand how private a given chatbot is, evaluate the policies and security features of the platform behind it. Clear and concise guidelines on data usage, storage, and disposal can help allay privacy concerns and allow the public to make informed decisions when engaging with chatbot services.

What are the privacy concerns with chatbots?

Privacy concerns with chatbots include data storage and access, data security, and the potential for misuse of sensitive information. In some cases, users may assume that their chats are private, only to find out later that their conversations can be accessed by others. There have been instances where sensitive information was inadvertently leaked or accessed without consent, leading to potential ethical and legal repercussions. The level of privacy and security offered by chatbot platforms varies significantly, so it’s important to research and choose a reputable platform for your chatbot needs.

Does ChatGPT store user data?

OpenAI and the applications or platforms that use ChatGPT may store user data and input for various purposes, such as improving the user experience or providing personalized recommendations. Always check the privacy policy of the specific application or platform to understand how user data is handled. If you have concerns about how your data is being used or stored, contact the application or platform provider directly.

Are there privacy concerns with ChatGPT?

ChatGPT, like any other AI model, has the potential to threaten privacy if it is not used responsibly, and the responsibility for protecting privacy lies with the developers and users of the model. The model does not go looking for personal information on its own, but anything a user types into a prompt is used to generate the response and, as noted above, may be stored by the service. To mitigate privacy concerns, developers and users should avoid entering sensitive data into prompts and make sure that any data that is entered is encrypted and protected. Additionally, developers should implement privacy and security measures such as data anonymization, access controls, and secure storage to protect user data.
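One way to keep sensitive data out of prompts is to redact it before the text ever leaves your application. The following is a minimal sketch in Python, not a complete solution: the `PII_PATTERNS` table and the `redact_pii` function are hypothetical names introduced for illustration, and the two regular expressions only catch simple email addresses and phone numbers. Real PII detection needs much broader coverage.

```python
import re

# Hypothetical, deliberately simple patterns for illustration only.
# They will miss many real-world formats and are not a full PII detector.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact_pii(text: str) -> str:
    """Replace every match of each pattern with a placeholder like [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Contact me at jane.doe@example.com or +1 555-123-4567."
    # The redacted prompt is what would be sent on to the chatbot.
    print(redact_pii(prompt))
```

Redacting on your side means the chatbot provider never receives the original values, whatever its storage policy turns out to be.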

How can you tell if you are dealing with a chatbot?

Determining if you are interacting with a chatbot can be tricky, as sophisticated AI chatbots have become quite human-like in their responses. Some signs that you might be talking to a chatbot include generic or repetitive answers, unrelated responses to your questions, an inability to handle complex inquiries, and consistent, near-perfect grammar and spelling. Skillfully developed chatbots, like ChatGPT, can sometimes be indistinguishable from real human beings, but spotting chatbots in general often comes down to noticing patterns in their communication.

While most chatbots are designed to be user-friendly and improve user experiences, detecting whether you are interacting with a chatbot can be important if you have concerns about privacy or data security. One way to confirm if you are communicating with a chatbot is to ask straightforward questions about its nature or for contextual information that a human would quickly understand. A chatbot may struggle to provide relevant answers or exhibit inconsistencies in response, revealing its artificial nature. Some platforms and websites also disclose the use of chatbots, which can help you determine if you are conversing with one.

What is the legal risk for chatbots?

Legal risks associated with chatbots can include violations of privacy and data protection laws, such as GDPR in the European Union. Other potential risks involve the unauthorized disclosure of sensitive information or defamation caused by inappropriate responses generated by chatbots. Businesses that use chatbots must ensure that they are complying with applicable laws and regulations, which often involves understanding the data storage and privacy policies of the chatbot platforms they are using.

Apart from the primary legal risks, there is a growing need to ensure that chatbots function ethically and responsibly. This includes ensuring transparency in data collection and processing, fair use of data and algorithms, and the provision of equal access to services. Compliance with ethical guidelines can help businesses minimize potential legal risks, fostering consumer trust and facilitating the broader adoption of chatbot technologies. It is also essential for companies to invest in ongoing monitoring, periodic audits, and employee training to ensure chatbots remain compliant with regulations and ethical standards.

What can chatbots not do?

Despite their increasing sophistication, chatbots still have limitations. They cannot yet fully understand or replicate human emotions, engage in genuinely empathetic conversations, or provide solutions to abstract or highly complex problems. Chatbots also struggle with handling sarcasm, irony, and humor effectively. Furthermore, they are bound by the limitations of their programming and the data they have been trained on, which means they might not be able to answer every question posed to them accurately or provide personalized support. In general, chatbots are continually improving, but they still have some way to go before they can truly compete with human abilities in all aspects of communication.

The limitations of chatbots have various implications for user privacy. For example, chatbots might inadvertently misinterpret sensitive user data or fail to recognize when a user is expressing discomfort. This could lead to unintentional privacy breaches or awkward experiences. It is crucial for developers to remain aware of these limitations and focus on improving chatbot capabilities over time to minimize potential risks. Additionally, businesses using chatbot technology should offer transparency about the scope and limitations of the service so that users can make informed decisions about the types of conversations and data they are comfortable sharing.

Useful Tips

- Choose a reputable chatbot platform with a strong focus on security and privacy.

- Review the privacy policy of any chatbot provider to ensure it aligns with your privacy requirements.

- Train your chatbot to avoid collecting personally identifiable information (PII) whenever possible.

- Encrypt and protect stored data to prevent unauthorized access.

- Regularly update and audit your chatbot system to ensure it remains compliant with privacy regulations.

Implementing these tips can help businesses minimize privacy risks associated with chatbot use and ensure that they are providing a secure and trustworthy service to their users. Transparency and continuous improvement are critical components of effective chatbot privacy management. By focusing on user safety and security, businesses can build trust and foster greater user engagement with chatbot-powered services.
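For businesses that store chat logs, two of the tips above can be sketched in a few lines of Python: keeping direct identifiers out of stored data, and regularly clearing out old records. This is a sketch under stated assumptions, not a definitive implementation. The function names `pseudonymize` and `purge_expired`, the `stored_at` field, and the 30-day window are all hypothetical. Note that a keyed hash is pseudonymization, not encryption, so it complements encrypted storage and does not replace it.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 hash so logs never contain the raw user ID."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def purge_expired(records, now, max_age=timedelta(days=30)):
    """Keep only records stored within max_age of now (assumed retention window)."""
    return [r for r in records if now - r["stored_at"] <= max_age]


if __name__ == "__main__":
    key = b"replace-with-a-secret-from-your-key-store"  # assumption: managed elsewhere
    now = datetime.now(timezone.utc)
    log = [
        {"user": pseudonymize("user-42", key), "stored_at": now - timedelta(days=5)},
        {"user": pseudonymize("user-42", key), "stored_at": now - timedelta(days=45)},
    ]
    # Only the record from five days ago survives the purge.
    print(len(purge_expired(log, now)))
```

Because the same user ID and key always produce the same hash, conversations can still be grouped per user for auditing without the real identifier appearing in storage.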

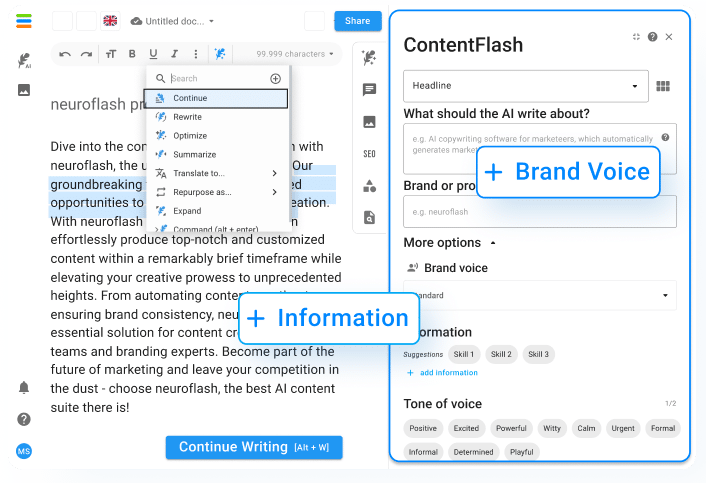

neuroflash as the chatbot you can trust

neuroflash offers a powerful ChatGPT alternative with diverse features, intuitive workflows, and smooth integration with existing applications. Unlike ChatGPT, neuroflash does not store user data or conversations, but instead provides community prompts and templates that the user can freely decide to share. This way, useful and efficient prompts for the AI can be shared and rated by neuroflash users to help each other generate the best possible output.

To ensure privacy and security for your chatbot system, sign up with neuroflash for a reliable and secure solution.

Conclusion

In conclusion, chatbot conversations can be either public or private, depending on the platform or application being used. It is crucial for users and businesses to understand the potential privacy concerns associated with using chatbots and take necessary precautions to safeguard their sensitive data. As AI and chatbot technologies continue to evolve, it is essential for the industry to maintain a strong focus on security and privacy to ensure user trust.