Since OpenAI released GPT-3 in June 2020, the NLP (natural language processing) world has been changing rapidly. Expectations for the fourth generation, GPT-4, are correspondingly high, and there is a lot of speculation about its possible features and improvements. This blog post summarizes the latest rumors and predictions about the GPT-4 parameters.

GPT-4 Parameters: The facts after the release

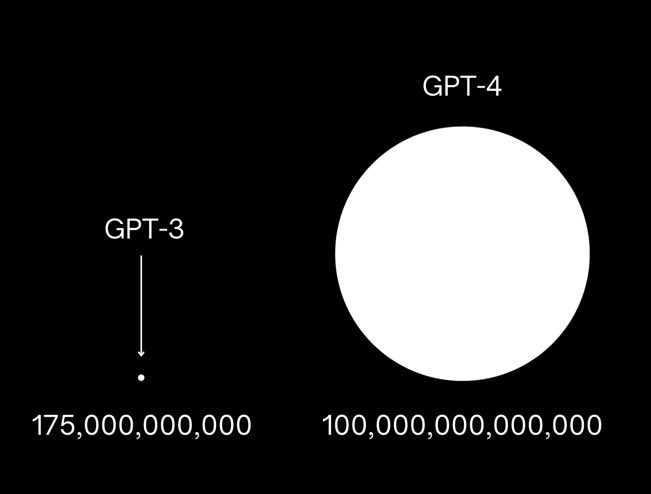

Even after the release of GPT-4, OpenAI has not disclosed how many parameters the model has. There is speculation that GPT-4 uses around 100 trillion parameters, but OpenAI CEO Sam Altman has denied this. Since GPT-3 already has 175 billion parameters, however, we can expect a higher number for GPT-4.

Overview of GPT-4 parameters: rumors and forecasts

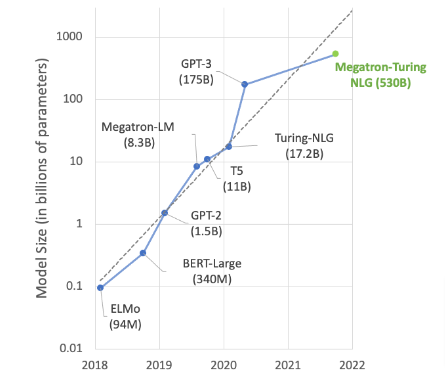

Let’s take a look at some facts and figures to understand what we should expect from GPT-4. One of the most important indicators of a model's potential capabilities is its number of parameters. Plotting the parameter counts of AI models over the last five years shows a clear trend of exponential growth. In 2019, OpenAI released GPT-2 with 1.5 billion parameters and followed up a little more than a year later with GPT-3, which has more than 100 times as many parameters (175 billion). If this trend continues, GPT-4 could have somewhere between 1 trillion and 10 trillion parameters, perhaps even 20 trillion.
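
The rough range above can be reproduced with a naive extrapolation. The sketch below assumes that the GPT-2 to GPT-3 growth factor simply repeats for the next generation; this is an illustrative assumption, not a forecasting method OpenAI has described.

```python
# Naive parameter-count extrapolation.
# Assumption (illustrative only): the next generation repeats the previous growth factor.

GPT2_PARAMS = 1.5e9   # GPT-2, 2019
GPT3_PARAMS = 175e9   # GPT-3, 2020


def growth_factor(old_params: float, new_params: float) -> float:
    """Return the multiplicative jump between two model generations."""
    return new_params / old_params


def extrapolate(current_params: float, factor: float) -> float:
    """Project the next generation by applying the same factor again."""
    return current_params * factor


factor = growth_factor(GPT2_PARAMS, GPT3_PARAMS)
projection = extrapolate(GPT3_PARAMS, factor)

print(f"GPT-2 to GPT-3 growth factor: {factor:.1f}x")
print(f"Naive next-generation projection: {projection / 1e12:.1f} trillion parameters")
```

With these two data points the factor is about 117x, and the projection lands near 20 trillion, the upper end of the range mentioned above. Two data points are, of course, far too few for a reliable prediction.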

There are two rumors circulating about the number of parameters of GPT-4. One says that GPT-4 is not much bigger than GPT-3; the other says that it has 100 trillion parameters. It’s hard to tell which rumor is true, but based on the trend line, GPT-4 should have more than a trillion parameters.

GPT-4 parameter rumors and forecasts: What to consider?

OpenAI has been working on GPT-4 for the last few years and has continued to collect data since the release of ChatGPT, so it is possible that the company skipped a generation and is approaching a 1000-fold increase in parameters.

Another factor to consider is sparsity. The human brain is very sparsely connected: each neuron links to only a tiny fraction of all other neurons, and most connections are local. The same principle can be applied to AI models, so it is possible that GPT-4 will be a sparse model with mostly local connections.
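
To get a feel for how much sparsity can save, the sketch below counts connections in a fully connected layer versus a locally connected one. It is a toy model under a hypothetical assumption: each neuron connects only to inputs within a fixed distance (`radius`) of its own position. The layer size and radius are invented and say nothing about GPT-4's real design.

```python
# Compare a fully connected (dense) layer with a locally connected (sparse) one.
# Hypothetical: each output neuron connects only to inputs within `radius` of its position.


def dense_connections(n: int) -> int:
    """Every one of n outputs connects to every one of n inputs."""
    return n * n


def local_connections(n: int, radius: int) -> int:
    """Output i connects only to inputs i-radius .. i+radius, clipped at the edges."""
    total = 0
    for i in range(n):
        lo = max(0, i - radius)
        hi = min(n - 1, i + radius)
        total += hi - lo + 1
    return total


n, radius = 1000, 2
dense = dense_connections(n)
local = local_connections(n, radius)
print(f"dense: {dense:,} connections")
print(f"local: {local:,} connections ({local / dense:.2%} of dense)")
```

In this toy setting, the local layer keeps about 0.5% of the dense layer's connections, and that fraction shrinks further as the layer grows while the radius stays fixed.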

It is important to note that all of this information is based on rumors and predictions; there is no official confirmation from OpenAI about GPT-4's capabilities. Based on the trend line and recent advances in AI technology, however, GPT-4 can be expected to be a significant improvement over its predecessor. We’ll have to wait and see what OpenAI officially reveals about GPT-4; until then, we can only speculate about its potential capabilities.

What would the GPT-4 parameter forecasts mean?

What does this mean for GPT-4? If we take the rumors and predictions about the GPT-4 parameters at face value, the following implications arise:

- If it does indeed have 100 trillion parameters, it is likely to be a sparse model. Because a sparse model stores and computes only its nonzero weights, it would be faster and need less memory than a dense model of the same nominal size. Sparsity would also help with scaling: a jump from 175 billion to 100 trillion parameters would be hard to manage with dense networks.

- Another limitation to consider is memory. Storing GPT-3's 175 billion parameters at 32-bit precision (4 bytes each) takes about 700 gigabytes of VRAM, so a model with a thousand times as many parameters would need about 700 terabytes. That would be a significant investment for OpenAI and would probably require sparse networks to reduce the memory footprint.

- It is also possible that GPT-4 is not much larger than GPT-3, with somewhere between 1 trillion and 10 trillion parameters. Even then, it would benefit from sparse networks. This might also explain why GPT-4 took two years or more to develop: the switch from dense to sparse networks may have required new training algorithms.

- Sparse networks could also lead to a change in architecture similar to Google's Switch Transformer, which activates only the parts of the network needed for a given input. GPT-4 might then have a neuromorphic architecture in which only certain parts of the network are active at any time. This is comparable to the human brain, where only certain regions light up when particular tasks are performed.
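
The "activate only what is needed" idea from the last point can be illustrated with a toy version of top-1 ("switch") routing, the mechanism used in Google's Switch Transformer: a small router scores every expert sub-network, and only the highest-scoring expert processes the token. The router weights and the three toy experts below are hypothetical and say nothing about how GPT-4 is actually built.

```python
import math

# Toy top-1 ("switch") routing in the spirit of Google's Switch Transformer.
# Hypothetical: router weights and experts are invented for illustration only.


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """A toy 'expert' that just scales its input vector."""
    return lambda x: [scale * v for v in x]


def route_top1(token, router_weights, experts):
    """Send the token to the single highest-scoring expert; the others stay idle."""
    logits = [sum(w * v for w, v in zip(row, token)) for row in router_weights]
    probs = softmax(logits)
    best = max(range(len(experts)), key=lambda i: probs[i])
    # Only experts[best] is evaluated; its output is weighted by the router probability.
    output = [probs[best] * v for v in experts[best](token)]
    return best, output


ROUTER = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # one row of router weights per expert
EXPERTS = [make_expert(1.0), make_expert(2.0), make_expert(3.0)]

for token in ([0.2, 0.9], [1.0, -0.5]):
    best, output = route_top1(token, ROUTER, EXPERTS)
    print(f"token {token} -> expert {best}, output {output}")
```

Because each token passes through only one expert, the compute per token stays roughly constant as more experts (and therefore more parameters) are added. The real Switch Transformer adds details such as expert capacity limits and a load-balancing loss, which this toy omits.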

In conclusion, while we can only speculate about the capabilities and architecture of GPT-4, it is likely to be a sparse model with somewhere between 1 trillion and 10 trillion parameters. Sparse networks would help solve the scaling problem and reduce the amount of memory required.
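
The memory figures in the list above follow from simple arithmetic: number of parameters times bytes per parameter. The sketch below reproduces them for 32-bit weights and adds a rough sparse estimate. The 10% density and the value-plus-index storage layout are hypothetical assumptions, chosen only to show how sparsity changes the picture.

```python
# Back-of-the-envelope memory for storing model weights.
# Weights only: training also needs gradients, optimizer state and activations.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def dense_weight_bytes(n_params: int, dtype: str = "fp32") -> int:
    """Every parameter is stored explicitly."""
    return n_params * BYTES_PER_PARAM[dtype]


def sparse_weight_bytes(n_params: int, density: float, dtype: str = "fp32",
                        index_bytes: int = 4) -> int:
    """Rough estimate: only nonzero weights are stored, each with one index.
    Hypothetical value+index layout; real sparse formats differ."""
    nonzeros = int(n_params * density)
    return nonzeros * (BYTES_PER_PARAM[dtype] + index_bytes)


GPT3 = 175_000_000_000
scenarios = {
    "GPT-3 (175B)": GPT3,
    "1000x GPT-3": GPT3 * 1000,
    "100 trillion": 100_000_000_000_000,
}
for name, n in scenarios.items():
    dense_gb = dense_weight_bytes(n) / 1e9
    sparse_gb = sparse_weight_bytes(n, density=0.10) / 1e9
    print(f"{name}: dense fp32 {dense_gb:,.0f} GB, 10%-dense sparse {sparse_gb:,.0f} GB")
```

A sparse format stores an index next to every nonzero value, so sparsity only saves memory once the density is low enough to outweigh that overhead. In this layout, fp32 weights with 4-byte indices break even at 50% density.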

GPT-4 vs. GPT-3

There are several expert opinions on what to expect from GPT-4 compared to GPT-3. For example, Dr. Jochen Kopp, professor of artificial intelligence at the University of Trier, says: “GPT-4 is suitable for use cases that require strong language processing and understanding.” This points to GPT-4’s ability to solve many tasks with high precision.

Another advantage of GPT-4 is that it processes data more efficiently than its predecessor, which lets it tackle more complex tasks. It also means that GPT-4 can handle larger data sets than GPT-3 and thus achieve better results.

All in all, GPT-4 appears to be a big step forward from its predecessor and has a wide range of potential applications. Whether it ultimately outperforms GPT-3, however, remains to be seen and depends on the application. We will have to follow the evolution of machine learning to find out which model proves most successful in the end!

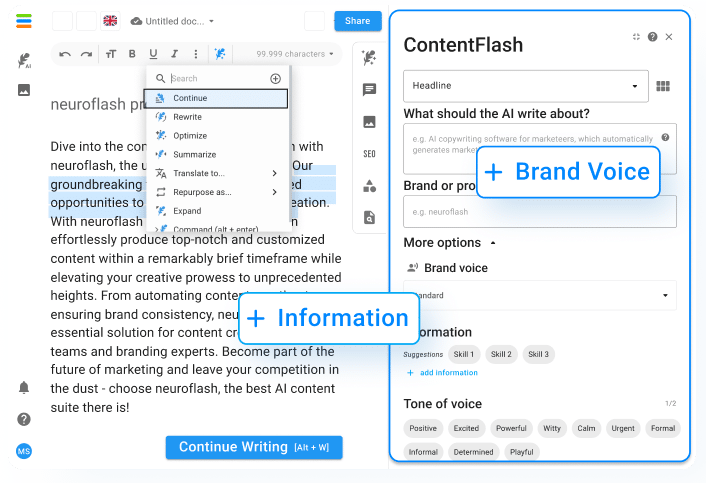

neuroflash as an example of GPT-4 applications

neuroflash combines GPT-3 and GPT-4 in many applications, such as content creation, AI chat and question answering. Users can have a wide range of texts and documents created from a short briefing: with over 100 different text types, neuroflash AI can generate texts for any purpose. For example, to create a product description with neuroflash, you only have to describe your product briefly to the AI, and the magic pen does the rest.

neuroflash can also help with more creative tasks, such as writing a story.

Finally, you can also use neuroflash as a ChatGPT alternative and communicate directly with our AI.

neuroflash can do more than generate texts: other functions, such as an SEO analysis of written texts and an AI image generator, add further value.

Would you also like to benefit from the power of GPT-3 plus additional functions? Then try neuroflash yourself and create a free account!

Conclusion

In summary, GPT-4 and other large language models have the potential to revolutionize the field of AI. OpenAI has not yet confirmed how many parameters GPT-4 has, so we can only speculate. Rumors and forecasts are interesting, but one should be careful about relying on them: keep a critical mindset and gather information from multiple sources before drawing conclusions.