We have already written a couple of articles on artificial intelligence on our blog. For example, we’ve written about how GPT-3 works and how text generators can improve your content. But now a new innovation in the world of AI is causing excitement: the text to image generator DALL-E 2. What is DALL-E 2 about? How does this new technology work? And how might you be able to use it for yourself soon? You will learn the answers to these questions and much more in this article!

What is DALL-E 2?

DALL-E 2 is the new revolutionary text to image generator from OpenAI. It allows users to create images based on text prompts. Unlike GPT-3, which generates text, DALL-E 2 builds on OpenAI’s CLIP model, which links the meaning of input words (natural language inputs) to visual concepts so that they can be rendered as images. By using this generator, users can turn their own creative ideas into vivid pictures.

In doing so, DALL-E 2 can create images of realistic objects or interpret text inputs describing things that don’t actually exist in reality. For example, if you want to generate a realistic scene, this is no problem for DALL-E 2:

Do you want to create a propaganda poster of Napoleon Bonaparte as a cat with a piece of cheese in his hand instead? Then DALL-E 2 can help you with that too:

The fascinating thing about DALL-E 2 is that it is a relatively new technology, which was only announced in April 2022. It builds on its predecessor, DALL-E, which was introduced in January 2021, and can generate photorealistic images from text prompts. It is therefore worth taking a closer look at the technology behind DALL-E 2.

How does DALL-E 2 work?

The DALL-E 2 text to image generator uses natural language processing and artificial intelligence to take the information from a text prompt and convert it into a variety of images. In doing so, DALL-E 2 can control various attributes in an image just like photo editing software would. For example, the text to image generator can change objects or artistic styles in an image. But how does DALL-E 2 manage to gain and implement this understanding of images? The answer is actually quite complicated. Nevertheless, I have read up on the subject for this blog entry and will try to explain it as best I can.

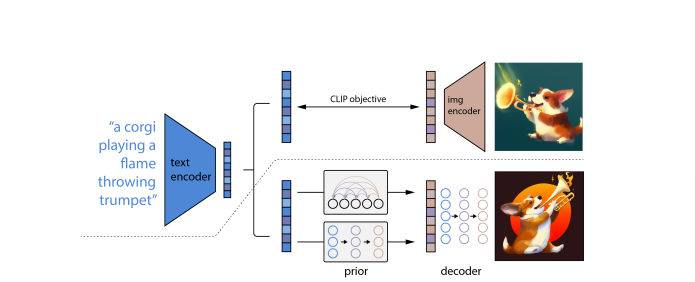

First of all, the artificial intelligence has to be trained. Deep learning is used to teach the AI which connections it needs to make in order to generate the final product. For this learning process, DALL-E 2 uses the existing CLIP technology (Contrastive Language-Image Pre-training), which was also developed by OpenAI. CLIP is trained on text-image pairs from the internet and learns to find the text description that matches an image. The process of DALL-E 2 thus consists of two stages:

In the upper part of the image you can see the AI training process of CLIP. DALL-E 2 uses the CLIP model to encode text-image pairs and produce a so-called latent code.

In the lower part of the image the second step is explained, where the text prompt is converted into a new image. Here, the latent code is taken and converted by a so-called prior. After that, a generator called a decoder is used to create new variations of the image that match the text prompt.
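To make the idea of matching text and images a little more tangible, here is a minimal sketch of how a CLIP-style model could pick the best caption for an image by comparing embeddings. The three-number embeddings, the captions and the function names are all made up for illustration. Real CLIP embeddings are produced by trained neural networks and have far more dimensions, so this only shows the comparison step, not the training.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings (assumption: a real encoder would compute these).
IMAGE_EMBEDDING = [0.9, 0.1, 0.2]  # stands in for an encoded photo of a cat
CAPTION_EMBEDDINGS = {
    "a photo of a cat": [0.8, 0.2, 0.1],
    "a photo of a car": [0.1, 0.9, 0.3],
    "a bowl of soup": [0.2, 0.1, 0.9],
}


def best_caption(image_embedding, caption_embeddings):
    """Return the caption whose embedding is most similar to the image embedding."""
    return max(
        caption_embeddings,
        key=lambda caption: cosine_similarity(image_embedding, caption_embeddings[caption]),
    )


print(best_caption(IMAGE_EMBEDDING, CAPTION_EMBEDDINGS))
```

In this toy setup the cat caption wins because its vector points in almost the same direction as the image vector.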

So the new image variation is created in a few steps:

- First, you enter a text prompt into the text encoder. The text encoder is part of the CLIP model, which was trained on text-image pairs.

- Next, a so-called prior is used to establish a link between the CLIP text embedding (based on the text prompt) and a CLIP image embedding that reflects the information from the text prompt.

- Finally, a decoder is used to generate new image variations that visually represent the text prompt.
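The three steps above can be sketched as a small pipeline. This is only a toy illustration of how the pieces connect and not OpenAI's implementation: `encode_text`, `prior` and `decode` are hypothetical stand-ins that use simple arithmetic where DALL-E 2 uses large trained neural networks, and the "image" is just a tiny grid of grey values.

```python
import hashlib

EMBEDDING_SIZE = 4  # toy size, chosen only for this sketch


def encode_text(prompt):
    """Toy stand-in for the CLIP text encoder: prompt -> fixed-length vector."""
    digest = hashlib.sha256(prompt.encode("utf-8")).digest()
    return [byte / 255 for byte in digest[:EMBEDDING_SIZE]]


def prior(text_embedding):
    """Toy stand-in for the prior: text embedding -> image embedding."""
    return [1.0 - value for value in text_embedding]


def decode(image_embedding, width=2, height=2):
    """Toy stand-in for the decoder: image embedding -> grid of grey values (0-255)."""
    pixels = [round(value * 255) for value in image_embedding]
    return [
        [pixels[(row * width + col) % len(pixels)] for col in range(width)]
        for row in range(height)
    ]


def generate_image(prompt):
    """Chain the three stages: text encoder, prior, decoder."""
    text_embedding = encode_text(prompt)
    image_embedding = prior(text_embedding)
    return decode(image_embedding)


image = generate_image("a penguin and a seal in a boxing match")
print(image)
```

One difference worth noting: this sketch always returns the same grid for the same prompt, whereas DALL-E 2 produces several different variations per prompt.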

This allows you to create a variety of different images with different text inputs:

The technology behind DALL-E 2 is quite complicated and since I am unfortunately not a rocket scientist or expert in the field of AI, this explanation is also quite simplified. In fact, no one knows exactly why such generators work so well or what the artificial intelligence learns in the end. There is no fundamental theory for the phenomenon of deep learning that can explain everything. The networks used by AIs are too large and too complicated for us humans to fully understand with our current knowledge. All we know at the moment is that DALL-E 2 can use Deep Learning to understand not only individual objects, but also the relationship between those objects.

What's new with DALL-E 2?

As already mentioned, the DALL-E 2 text to image generator is the successor to DALL-E. This naturally raises the question of what is new in DALL-E 2 and what the technology can do. The answer is that DALL-E 2 provides many new functions and improvements:

- The DALL-E 2 text to image generator creates higher quality images. DALL-E 2 is based on a 3.5 billion parameter model and uses another 1.5 billion parameter model to maximize the resolution of the digitally created images. DALL-E 2 is also faster than its predecessor when it comes to processing images.

- DALL-E 2 generates more realistic images. The images produced by DALL-E 2 are more multi-faceted and have more complex backgrounds and more realistic lighting conditions and reflections. This puts the final products of DALL-E 2 far ahead of its predecessor’s images, since DALL-E could only create cartoon-like images that often had plain backgrounds.

- A revolutionary new feature of DALL-E 2 is also a function called Inpainting. With this feature, the DALL-E 2 text to image generator can perform various photo editing processes on an image. Through text input, the user can specify the changes and then select a specific area in the image to edit. For example, DALL-E 2 can be used to add objects to a specific area of the image, with shadows, reflections and textures already taken into consideration by artificial intelligence.

- The DALL-E 2 text to image generator has a better understanding of local scenes. DALL-E 2 can better recognize objects in an image and their relationship to each other. The program recognizes why certain pixels have a certain color and can assign this to objects in the image. For example, DALL-E 2 realizes that the ground in the bottom image reflects and when an object is added, a reflection of that object is automatically added.

- DALL-E 2 has a better understanding of global scenes. The text to image generator understands what is happening in an image and retains important objects specified in the text input when creating new variations. This may sound simple and obvious, but it is a very complex process for a machine that only recognizes different colored pixels.

- The DALL-E 2 text to image generator can be used to create variations of an image in different styles. For example, the generated image can be an impressionistic version of the original:

- Alternatively, DALL-E 2 can remain largely faithful to the original, making only minor changes, such as the angle of the object:

- Finally, DALL-E 2 lets you add another image to the original and the artificial intelligence will combine the images and create a new variation out of them for you.

- The DALL-E 2 text to image generator is more accurate and can better separate image categories. During the development of DALL-E 2, it was discovered that the algorithm was prone to incorrect connections. For example, when the system was trained with an image of an apple that was labeled as an orange, the artificial intelligence was misled and the result was skewed. However, this problem was fixed in DALL-E 2.
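The Inpainting feature from the list above rests on a simple idea: only the area the user selects is replaced, and the rest of the image stays untouched. The following sketch shows just that masking step with tiny grids of numbers. The function name `inpaint` and the sample values are my own assumptions for illustration. In DALL-E 2 the new content for the selected area is generated by the AI from the text input and the surrounding pixels, which this sketch does not attempt.

```python
def inpaint(original, generated, mask):
    """Keep original pixels where the mask is 0, use generated pixels where it is 1."""
    return [
        [gen if selected else orig for orig, gen, selected in zip(orig_row, gen_row, mask_row)]
        for orig_row, gen_row, mask_row in zip(original, generated, mask)
    ]


# Hypothetical 2x3 greyscale images and a mask marking the area to edit.
original = [[10, 10, 10], [10, 10, 10]]
generated = [[99, 99, 99], [99, 99, 99]]
mask = [[0, 1, 0], [0, 1, 1]]

edited = inpaint(original, generated, mask)
print(edited)  # [[10, 99, 10], [10, 99, 99]]
```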

The possible disadvantages of the DALL-E 2 text to image generator

Up to this point, the technology of the DALL-E 2 text to image generator sounds very convincing. Nevertheless, such technology also has disadvantages and users have to be prepared for the fact that not all problems have been solved yet:

- The assignment of physical attributes is not always correct. DALL-E 2 does not always succeed in assigning the correct physical attributes to the objects in an image. For example, if you want to generate an image that shows a red cube on top of a blue cube, the DALL-E 2 text to image generator may mix up the colors of the cubes:

- Another major drawback of the DALL-E 2 text to image generator so far is that it cannot generate intelligible text in its images. For example, if you want to create a sign with the words “Deep Learning”, these are the results:

- The DALL-E 2 text to image generator also has difficulty creating details within complex scenes. For example, if one wants to create an image of Times Square in New York City, a suitable image is generated, but the iconic advertising screens have no discernible details:

- An important aspect of DALL-E 2 is that the artificial intelligence is trained with data from the internet. As we all know, the internet is not always the best place to gather one’s information. Therefore, the images generated by DALL-E 2 are subject to bias and sometimes reinforce stereotypes. For example, if one wants to generate images of construction workers, only images with male workers are generated. If one changes the occupation from construction worker to stewardess, only women are displayed:

- This artificial intelligence bias means that content can be monotonous or even problematic. Generated images can be biased towards topics such as nationality, skin color, sexuality, gender, and religion. If one wants to create an image of a wedding, a heteronormative image of a traditional Christian wedding with a white couple and white wedding guests is generated:

- Since DALL-E 2 is still a relatively new technology, it only works in English so far. Those who are not familiar with the English language will have difficulties creating text prompts and won’t be able to use the program to its full potential.

What dangers could arise from DALL-E 2?

Unfortunately, innovative technologies such as DALL-E 2 often bring dangers with them. The possible misuse of the technology is one of the biggest concerns for the developers, which is why DALL-E 2 is not open source technology at this point and can only be used via an invitation from the developers. We can understand that you want to get your hands on this great new technology as soon as possible, just like we do, but as it stands, the waiting list for users is still very long:

However, there is a reason for the long wait. Existing technologies like deepfakes have shown that programs that can be used to manipulate images can also be abused. For example, they can be used to create fake images that harm other people.

The DALL-E 2 text to image generator has therefore implemented some safeguards to help prevent any misuse. Input filters are designed to prevent people from creating certain types of harmful content (e.g. sexualized or suggestive images of children, violent images, explicit political images, etc.). All text prompts that DALL-E 2 receives must adhere to strict guidelines. To ensure that DALL-E 2 cannot be abused to create violent and hateful content, dangerous weapons have been removed from the AI database.
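OpenAI has not published how its input filters work in detail, so the following is only a sketch of the simplest possible approach: checking a prompt against a list of blocked words before it is passed on. The word list and the function name `is_prompt_allowed` are purely hypothetical, and a real filter is certainly more sophisticated than a word list.

```python
import re

# Hypothetical blocklist, chosen only for this example.
BLOCKED_TERMS = {"gore", "weapon"}


def is_prompt_allowed(prompt, blocked_terms=BLOCKED_TERMS):
    """Return True if none of the blocked terms appears as a word in the prompt."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return words.isdisjoint(blocked_terms)


print(is_prompt_allowed("a cat holding a piece of cheese"))  # True
print(is_prompt_allowed("A WEAPON on a table"))  # False
```

A simple word list like this is easy to get around with synonyms or misspellings, which is one reason why filtering prompts alone is not enough and the training data is restricted as well.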

OpenAI has announced that the DALL-E 2 text to image generator will eventually be available as an open source version for all users, but the developers are aware of their responsibility. Therefore, OpenAI prefers to proceed with caution in the case of DALL-E 2 until all threats have been eliminated.

We tested DALL-E 2!

neuroflash is one of the lucky users who have received a test account for DALL-E 2 and can use it to generate images from up to fifty text prompts a day free of charge. Of course, I wasted no time putting the new technology to the test and I am really excited about it! The variety of images you can generate with DALL-E 2 is amazing. You can choose different styles and also add context to the generated images. For example, if you want to create an album cover, all you have to do is add “album cover art” to the prompt:

A big question that I had about DALL-E 2 is whether it is possible to create images of famous people. Unfortunately, it is not possible to create realistic images of famous people (due to the risk of fake images). However, DALL-E 2 can still generate pictures of people that reflect the characteristics of the famous person so that there is a resemblance. For example, here is a picture of Oprah fleeing from a dinosaur during a fire:

Or here is a picture of Taylor Swift dancing with an octopus:

Additionally, you can edit the generated images afterwards by deleting a part of the image and then describing the desired new image. You can also create variations of a generated image to explore even more options. However, details may be lost in the process. For example, in this variation of the Taylor Swift image, the octopus has been unintentionally replaced by a snake:

A pleasant surprise was that texts in the generated images are more accurate and error-free than expected. As long as you use simple words, the AI can generate them correctly for the most part:

In general, you have to make sure that the text prompts you enter are detailed and accurate. For example, if you want to generate a boxing match between a penguin and a seal and you enter the prompt “box fight,” you will get this result:

If you enter the term “boxing match” instead, you will get the desired picture:

All in all, it can be said that DALL-E 2 is an amazing new technology that sets no limits to human creativity. I am sure that once DALL-E 2 is released, many people will be able to use the technology in many different ways.

Why use DALL-E 2?

Of course, DALL-E 2 isn’t a purely dangerous technology, as it also offers great new possibilities and can be used in a variety of ways.

Our hope is that DALL·E 2 will empower people to express themselves creatively. DALL·E 2 also helps us understand how advanced AI systems see and understand our world, which is critical to our mission of creating AI that benefits humanity.

OpenAI

With DALL-E 2 you can effectively create unique and creative images. You don’t need photo editing skills or a strong sense of art. Even knowledge of photo editing software (e.g. Photoshop) is no longer necessary in order to edit an image.

Additionally, the DALL-E 2 text to image generator doesn’t just offer high quality pictures; it also works very fast. In just a few minutes, you can generate new images that would have taken a human being hours to create. Due to the variety of options, there are no limits to your creativity. On the contrary! DALL-E 2 will challenge and expand people’s creativity.

Finally, the DALL-E 2 text to image generator is a great example of how artificial intelligence continues to evolve. In the future, the images generated by DALL-E 2 can show us whether the system actually understands human thinking or whether it just mimics what we teach it.

The bottom line is: We are definitely excited to see how the DALL-E 2 text to image generator will develop and can’t wait to work with DALL-E 2 ourselves someday! However, if you don’t want to wait that long, you can instead enjoy the benefits of AI that are already on the market. For example, you can use the neuroflash text generator and have an artificial intelligence generate up to 2,000 words for you for free, in over 50 different text types!

Generate unique AI images with neuroflash

With the ability to generate images from text, the potential of artificial intelligence as a resource becomes clear. This is great progress achieved thanks to modern technology. That’s why neuroflash now combines the #1 German-language text generator with a new feature, text to image generation. This makes neuroflash the first company in the DACH region to offer its customers the opportunity to try AI image generation for themselves for free.

How can you make money with neuroflash’s AI generated images and use them for your business?

- Low-content books

- Covers for books, songs, comics, e-books, …

- Illustrations for bedtime stories, books, comics…

- Print on demand images or post cards

- Easy stock images for blogs (e.g. food blogs)

- NFTs

- Presentations & Slide decks

- Images for social media posts and newsletters

- Inspiration for landing page designs, product designs

Tip: Combine neuroflash with Photoshop or other programs:

- Use Photoshop and enlarge our images from 72 dpi to 300 dpi with Preserve Details 2.0.

- In the latest version of Photoshop (Beta) there is a “Photo Recovery” feature under “Neural Filters” that usually improves the appearance of the eyes and other odd facial features.

- Then do some basic curve and color corrections and brighten the eyes a bit.